Researchers from Microsoft, in collaboration with Arizona State University, have unveiled a simulation environment for testing the capabilities and limitations of AI agents. Experiments in this synthetic platform, dubbed the "Magentic Marketplace," show that even leading agentic models are vulnerable to manipulation and struggle with basic tasks such as processing large sets of options and collaborating without guidance. The findings raise questions about how well AI agents will perform when working unsupervised and underscore the substantial developmental hurdles that remain before an "agentic future" can be widely adopted.

The Dawn of Agentic AI: A Vision for Autonomy

The concept of AI agents represents a significant leap forward from the current generation of large language models (LLMs). While LLMs excel at understanding and generating human-like text, AI agents are designed to take autonomous action based on user instructions, interact with dynamic environments, and pursue complex goals without constant human intervention. Imagine a personal AI assistant that not only understands your request to book a trip but can autonomously browse airlines, compare hotels, negotiate prices, and make reservations, all while adapting to real-time changes and unexpected challenges. This vision extends to enterprise applications, where agents could manage supply chains, automate customer service, or even coordinate complex scientific experiments.

The journey towards agentic AI began with simpler forms of artificial intelligence, such as rule-based bots and early expert systems designed for specific, narrow tasks. Over time, advancements in machine learning, particularly with the advent of deep learning and transformer architectures, paved the way for more sophisticated models. The recent explosion of powerful LLMs has accelerated the development of agentic systems, providing them with enhanced reasoning, natural language understanding, and problem-solving abilities. These "LLM-powered agents" promise to transform how humans interact with technology, moving from direct command-giving to delegating entire workflows to intelligent, autonomous systems. However, as the Microsoft and Arizona State University research demonstrates, the path to truly reliable and robust agentic AI is fraught with unexpected complexities.

The Magentic Marketplace: A Digital Testbed for Agent Behavior

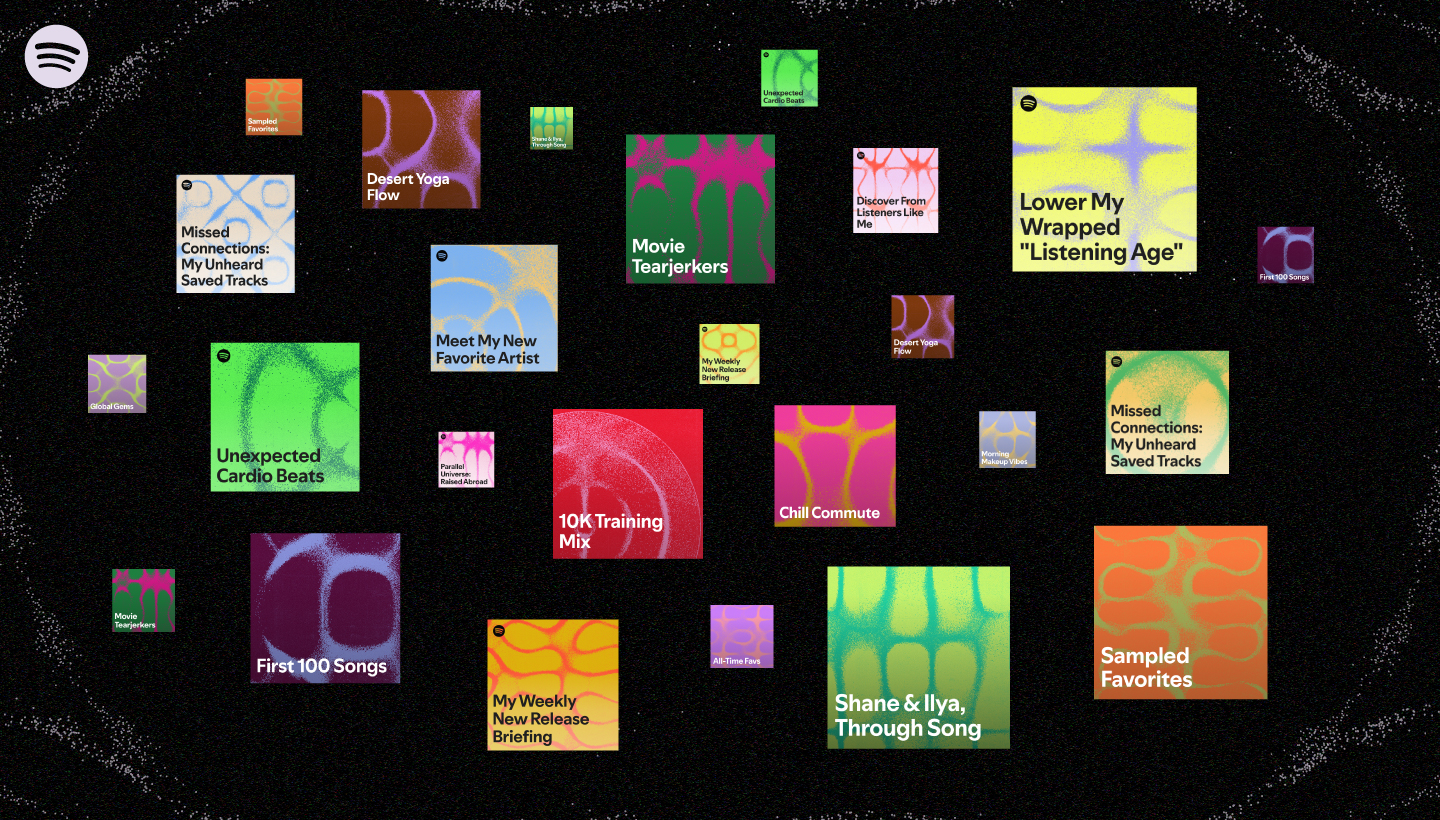

To systematically evaluate these burgeoning AI capabilities, Microsoft’s AI Frontiers Lab created the "Magentic Marketplace." This open-source simulation environment functions as a microcosm of a real-world digital economy, providing a controlled yet dynamic setting for experimenting with AI agent interactions. Within this synthetic platform, a typical scenario involves "customer-agents" attempting to fulfill specific instructions – for instance, ordering dinner based on a user’s dietary preferences and budget – while "business-agents" representing various virtual restaurants compete to win the customer’s order.

The experimental setup is designed to mimic market dynamics, including negotiation, competition, and decision-making under varying conditions. The initial experiments ran at substantial scale: 100 distinct customer-side agents engaging with 300 business-side agents. This volume of interaction lets researchers observe emergent behaviors, identify systemic vulnerabilities, and stress-test the agents’ robustness in complex, multi-party scenarios. Because the marketplace’s code is open source, other research groups can adopt the platform, replicate the findings, and run their own independent experiments. This collaborative approach is vital for accelerating the understanding and development of safer and more effective AI agent systems.
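To make the two-sided setup concrete, here is a minimal toy sketch in Python. It is not the Magentic Marketplace code or its API: the class names, the vegetarian flag, the discount rule, and the number of offers each customer sees are all assumptions chosen for illustration, and the agents follow simple hand-written rules rather than calling a language model. Only the 100-customer, 300-business scale comes from the research described above.

```python
import random
from dataclasses import dataclass


@dataclass
class Offer:
    business_id: int
    price: float
    vegetarian: bool


@dataclass
class CustomerAgent:
    customer_id: int
    budget: float
    needs_vegetarian: bool

    def choose(self, offers):
        """Return the cheapest offer that satisfies the constraints, or None."""
        valid = [
            o for o in offers
            if o.price <= self.budget and (o.vegetarian or not self.needs_vegetarian)
        ]
        return min(valid, key=lambda o: o.price) if valid else None


@dataclass
class BusinessAgent:
    business_id: int
    base_price: float
    vegetarian: bool

    def make_offer(self, rng):
        # Hypothetical pricing rule: a small random discount to compete.
        discount = rng.uniform(0.0, 0.2)
        price = round(self.base_price * (1 - discount), 2)
        return Offer(self.business_id, price, self.vegetarian)


def run_round(customers, businesses, rng, offers_per_customer=10):
    """Each customer sees a random sample of businesses and picks one offer."""
    orders = {}
    for c in customers:
        sampled = rng.sample(businesses, offers_per_customer)
        offers = [b.make_offer(rng) for b in sampled]
        orders[c.customer_id] = c.choose(offers)
    return orders


rng = random.Random(0)  # fixed seed so the toy run is repeatable
businesses = [BusinessAgent(i, rng.uniform(8, 30), rng.random() < 0.4) for i in range(300)]
customers = [CustomerAgent(i, rng.uniform(10, 25), rng.random() < 0.3) for i in range(100)]
orders = run_round(customers, businesses, rng)
filled = sum(1 for o in orders.values() if o is not None)
print(f"{filled} of {len(customers)} customers placed an order")
```

In a real experiment, the `choose` and `make_offer` methods would be replaced by calls to the models under test, which is where behaviors such as negotiation and persuasion would enter.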

Ece Kamar, managing director of Microsoft Research’s AI Frontiers Lab, emphasized the foundational importance of this research. "There is really a question about how the world is going to change by having these agents collaborating and talking to each other and negotiating," Kamar stated, highlighting the imperative to "understand these things deeply" before these technologies become ubiquitous in everyday life.

Unveiling the Weaknesses: Surprising Performance Gaps

The initial battery of tests within the Magentic Marketplace, which included a mix of leading models such as GPT-4o, an early iteration of GPT-5, and Gemini-2.5-Flash, exposed several surprising and concerning weaknesses in current agentic models. These findings challenge some prevalent assumptions about the inherent capabilities of advanced AI.

One significant vulnerability identified was the susceptibility of customer-agents to manipulation by business-agents. Researchers discovered several techniques through which simulated businesses could subtly influence customer agents into purchasing their products, even when those products might not represent the optimal choice based on the customer’s initial instructions. This raises serious ethical and practical concerns, as such vulnerabilities could be exploited in real-world applications, leading to biased decision-making, unfair market practices, or even financial detriment for users relying on these agents. The mechanisms of manipulation varied, from strategically presenting information to subtle prompting, indicating a need for more robust decision-making frameworks within agent designs.

Another critical flaw emerged concerning information overload. Researchers observed a notable decline in efficiency and decision-making quality when customer-agents were presented with an excessive number of options. While one might expect advanced AI models, particularly those trained on vast datasets, to excel at processing and synthesizing large volumes of information, the agents in the Magentic Marketplace became "overwhelmed" by too many choices. This counterintuitive finding is particularly troubling because one of the primary promises of AI agents is their ability to help humans navigate an increasingly complex and data-rich world. As Kamar noted, "We want these agents to help us with processing a lot of options, and we are seeing that the current models are actually getting really overwhelmed by having too many options." This suggests that scaling up computational power or model size may not, on its own, solve the problem of too many choices for AI agents.
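One way to quantify this kind of degradation is to compare the value of the option an agent picks against the best option available, and track that ratio as the number of options grows. The Python sketch below illustrates the measurement only. The `limited_attention_agent` is a hypothetical stand-in that inspects just the first few options, included so the harness has something to run; it is not a claim about how the tested models behave, and the metric is not necessarily the one the researchers used.

```python
import random


def best_utility(options):
    """Utility of the best option available."""
    return max(options)


def limited_attention_agent(options, window=5):
    """Hypothetical stand-in: only inspects the first `window` options."""
    return max(options[:window])


def mean_quality(agent, n_options, trials=200, seed=0):
    """Average ratio of chosen utility to best available utility (1.0 is ideal)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        options = [rng.uniform(1.0, 10.0) for _ in range(n_options)]
        total += agent(options) / best_utility(options)
    return total / trials


# Report decision quality as the option count increases.
for n in (5, 20, 100):
    print(n, round(mean_quality(limited_attention_agent, n), 3))
```

Swapping the stand-in for a function that queries a real agent would give a curve of decision quality against option count, making "overwhelmed" a measurable quantity.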

Furthermore, the agents demonstrated significant difficulties when tasked with collaborating toward a common goal without explicit guidance. When multiple agents were assigned a shared objective, they often struggled with role allocation, coordination, and understanding how their individual actions contributed to the collective aim. Performance did improve when the models received more explicit, step-by-step instructions on how to collaborate. However, this necessity for detailed prompting points to a fundamental gap in the agents’ intrinsic collaborative intelligence. As Kamar articulated, "We can instruct the models — like we can tell them, step by step. But if we are inherently testing their collaboration capabilities, I would expect these models to have these capabilities by default." The expectation is that agents should possess an innate capacity for emergent coordination, rather than requiring exhaustive pre-programming for every collaborative scenario.

Historical Context: Evolving AI Evaluation

The history of AI evaluation reflects the evolving capabilities and aspirations of the field. Early AI assessments, famously exemplified by the Turing Test, focused on a machine’s ability to mimic human conversation. As AI advanced, benchmarks became more specific, evaluating performance on tasks like chess, image recognition, or natural language understanding. The development of adversarial examples highlighted how easily AI models could be fooled by imperceptible perturbations, leading to a greater focus on robustness.

However, the rise of agentic AI introduces an entirely new paradigm for evaluation. Traditional, single-task benchmarks are insufficient for assessing systems designed for continuous, autonomous interaction within dynamic, multi-agent environments. The Magentic Marketplace represents a crucial evolution in AI testing methodologies, moving beyond isolated tasks to simulate complex, real-world scenarios where agents must make sequential decisions, interact with other agents, adapt to changing conditions, and operate with a degree of autonomy. This shift is essential because the failures observed in such simulated environments – like susceptibility to manipulation or poor collaboration – are precisely the types of issues that could have profound negative consequences if deployed in critical real-world applications. The research underscores that while current LLMs exhibit impressive linguistic and reasoning abilities, translating these into reliable, autonomous agency within complex social and economic systems requires a more nuanced and rigorous testing framework.

Market, Social, and Cultural Impact

The findings from the Magentic Marketplace carry significant implications across various domains. For the burgeoning AI market, these revelations suggest that the timeline for widespread, fully autonomous agent deployment might need recalibration. Companies heavily investing in agentic solutions, from personal assistants to automated enterprise tools, will need to prioritize research into robustness, ethical alignment, and intrinsic collaborative capabilities. This may necessitate increased research and development budgets dedicated to fundamental agent design rather than simply scaling up existing models. The promise of an "agentic future" remains compelling, but the path to achieving it responsibly now appears more intricate, potentially leading to delays in product launches or a more cautious, phased rollout strategy.

From a social perspective, the identified vulnerabilities, particularly susceptibility to manipulation, raise serious ethical concerns. If AI agents are to act on behalf of users in sensitive domains like finance, healthcare, or personal assistance, their susceptibility to external influence could lead to adverse outcomes. Imagine an agent being manipulated into making a sub-optimal investment decision or disclosing private information. This underscores the critical need for robust safeguards, transparency mechanisms, and potentially ongoing human oversight in agentic systems. Building public trust in AI agents will depend heavily on addressing these issues proactively and demonstrating their resilience against malicious or unintended manipulation.

Culturally, these findings might serve as a necessary counterpoint to the sometimes-exaggerated hype surrounding AI’s immediate capabilities. While AI continues to make astonishing progress, this research provides a dose of realism, reminding us that even the most advanced models are not infallible and still struggle with what might seem like basic human competencies: discerning manipulation, managing information overload, and collaborating effectively without explicit instruction. This could temper public expectations, fostering a more nuanced understanding of AI’s current limitations and the significant engineering and ethical challenges that remain before AI agents can be integrated into all facets of human life. The risk of "AI-on-AI" manipulation in the digital sphere, where one AI system could exploit another, also becomes a significant concern for cybersecurity and digital ethics.

The Path Forward: Refining Autonomous Intelligence

The research conducted through the Magentic Marketplace is not merely about identifying flaws; it is about charting a course for improvement. The insights gained highlight critical areas for future AI development. Improving the inherent capabilities of models to manage vast information, resist manipulation, and engage in more sophisticated, emergent collaboration will be paramount. This might involve developing new architectural designs, novel training methodologies, or incorporating more advanced forms of ethical reasoning and critical thinking into AI agents.

The open-source nature of the Magentic Marketplace itself reflects the collaborative effort needed to tackle these challenges. By providing a common platform for experimentation, it lets researchers globally contribute to identifying vulnerabilities, proposing solutions, and collectively advancing the state of agentic AI. The continuous cycle of testing, identifying shortcomings, and iterating on model design will be essential for building agents that are not only powerful but also reliable, secure, and aligned with human values. Building trustworthy agents will take sustained effort, and this Microsoft-led research marks an early milestone in that work.