The popular open-source tool SGLang has evolved into a venture-backed commercial entity, RadixArk, which has reportedly raised funding at a valuation of approximately $400 million. The round was led by investment firm Accel, according to sources familiar with the matter. The move underscores a growing trend in which foundational AI technologies developed in academic settings are spun out into high-value startups to meet escalating market demand. RadixArk’s core mission is to make AI models faster and cheaper to run during their operational phase, known as inference.

The Crucial Role of AI Inference

To understand why RadixArk matters, it helps to understand AI inference. An artificial intelligence model’s lifecycle has two primary phases: training and inference. During training, a model is fed vast datasets and adjusts its internal parameters to capture the patterns in that data. This phase is computationally intensive, often occupying powerful and expensive hardware such as specialized GPUs for extended periods. Once trained, the model enters the inference phase, applying what it has learned to new, unseen inputs to generate outputs or make decisions. Inference is when AI models are actually "used" by applications, from generating text in a chatbot to recognizing objects in a self-driving car.
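To make the two phases concrete, here is a minimal Python sketch built on a toy model: ordinary least-squares fitting of a straight line stands in for training, and evaluating the fitted line on a new input stands in for inference. The functions `train` and `infer` are hypothetical illustrations, unrelated to SGLang or RadixArk; production LLMs have billions of parameters and run on GPUs, but the split between learning parameters once and applying them repeatedly is the same.

```python
def train(xs, ys):
    """Training phase (toy): learn slope and intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(params, x):
    """Inference phase (toy): apply the learned parameters to a new input."""
    slope, intercept = params
    return slope * x + intercept


# Training data generated by y = 2x + 1.
params = train([1, 2, 3, 4], [3, 5, 7, 9])
print(params)             # (2.0, 1.0)
print(infer(params, 10))  # 21.0
```

Training runs once and is expensive relative to a single prediction; `infer` is the step that repeats for every user request, which is why its per-call cost dominates operating budgets at scale.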

As AI models, particularly large language models (LLMs), have grown dramatically in size and complexity, the computational requirements for inference have risen sharply as well. Training is a large but largely upfront cost; inference is a continuous operating expense, because every query, every generated sentence, and every processed image consumes compute. Optimizing inference lets models respond faster, approach real-time latency, and serve more users on the same hardware, which translates directly into financial savings and better user experiences for businesses deploying AI. Tools like SGLang, and now RadixArk, aim to make sophisticated AI models economically viable for a wider range of applications and enterprises.

From Academia to Enterprise: A Proven Model

RadixArk traces its origins to the SGLang project in the UC Berkeley lab of Databricks co-founder Ion Stoica, following a well-established pathway for commercializing research. Professor Stoica is widely known for his contributions to distributed systems and data processing. He co-founded Databricks, which commercialized the Apache Spark open-source project, a powerful precedent. Spark, initially developed at UC Berkeley’s AMPLab, transformed big data processing, and Databricks built a multibillion-dollar business around its enterprise adoption. That history lends credibility to ventures emerging from Stoica’s research group, suggesting a deep understanding of how to turn complex research into practical, scalable commercial products.

Nurturing open-source projects in academic environments and later spinning them out as commercial entities offers several advantages. Open development allows rapid innovation and community contribution during the early, exploratory stages; it fosters collaboration, attracts diverse talent, and enables broad adoption without immediate commercial pressure. To scale, provide dedicated support, and build advanced features, however, a commercial entity often becomes necessary. The transition typically involves raising venture capital, hiring dedicated teams, and establishing a business model around the open-source core. RadixArk’s emergence from SGLang follows this path, building on a foundation laid in an academic research setting to address a critical industry need.

RadixArk’s Genesis and Leadership

SGLang began in Ion Stoica’s UC Berkeley lab in 2023, and its rapid evolution into a commercial startup highlights strong market demand for its capabilities. A key figure in this transformation is Ying Sheng, RadixArk’s co-founder and CEO. Sheng was a core contributor to SGLang and previously worked as an engineer at xAI, Elon Musk’s AI startup. She was also a research scientist at Databricks, further connecting her to the ecosystem around Professor Stoica. This blend of academic research, open-source development, and industry experience positions her well to lead RadixArk through its commercialization.

Key developers and maintainers of SGLang have moved to the newly launched startup, providing continuity and deep expertise. The team is tasked not only with advancing the open-source SGLang inference engine but also with building new offerings. For instance, RadixArk is reportedly developing Miles, a framework for reinforcement learning, the branch of AI in which models learn to make sequential decisions through trial and error. SGLang itself remains available as open source, but RadixArk has already begun offering paid hosting, its first step toward monetization. The company also raised early angel funding from investors including Intel CEO Lip-Bu Tan, a sign of early confidence from industry leaders. Ying Sheng, Accel, and Lip-Bu Tan did not respond to requests for comment.

A Broader Market Trend: The Race for Inference Optimization

RadixArk’s trajectory mirrors that of other open-source AI infrastructure projects, pointing to a broader trend. A close parallel is vLLM, another inference-optimization project that originated in Professor Ion Stoica’s UC Berkeley lab. vLLM has made a similar commercial transition: industry reports say the new company is in talks to raise more than $160 million at a valuation of about $1 billion, with Andreessen Horowitz reportedly leading the round. vLLM co-founder Simon Mo disputed the accuracy of the reported details in comments to TechCrunch. Both projects have gained substantial traction: many large technology companies run their inference workloads on vLLM, and SGLang has grown considerably more popular over the past six months, according to Brittany Walker, a general partner at CRV.

The surge in funding for startups that provide inference infrastructure to developers shows how central the inference layer has become. Investment in this segment is booming, reflecting the economic value at stake. In recent weeks, Baseten closed a $300 million round at a $5 billion valuation, The Wall Street Journal reported. That follows competitor Fireworks AI’s $250 million raise last October at a $4 billion valuation. These figures reflect strong investor interest in companies that can deliver measurable improvements in AI efficiency and cost, and the intense competition and high valuations suggest the market expects such optimization tools to shape how widely, and how cheaply, AI can be deployed.

The Economics and Impact of Efficient AI

The economic implications of efficient inference are far-reaching. As AI models become integral to business operations, from customer service chatbots and personalized recommendations to advanced analytics and content generation, the cost of running them at scale can quickly become prohibitive. Inference optimization tools address this directly by reducing the compute required for each request. Businesses can then either cut their cloud computing bills or serve a much larger user base on the same budget, and the savings can be reinvested in further AI development, research, or other growth initiatives.
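A back-of-the-envelope calculation shows why throughput translates into savings. The sketch below assumes a GPU rented at a hypothetical $3.60 per hour and compares a baseline serving setup generating 1,000 tokens per second with an optimized one generating 2,000. These numbers, and the helper `cost_per_million_tokens`, are illustrative assumptions, not figures reported for SGLang, RadixArk, or any real deployment.

```python
def cost_per_million_tokens(gpu_cost_per_hour: float, tokens_per_second: float) -> float:
    """Dollar cost of generating one million tokens on a single GPU."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_cost_per_hour * 1_000_000 / tokens_per_hour


# Hypothetical figures for illustration only.
GPU_COST_PER_HOUR = 3.60
baseline = cost_per_million_tokens(GPU_COST_PER_HOUR, tokens_per_second=1000)
optimized = cost_per_million_tokens(GPU_COST_PER_HOUR, tokens_per_second=2000)

print(f"baseline:  ${baseline:.2f} per 1M tokens")   # $1.00
print(f"optimized: ${optimized:.2f} per 1M tokens")  # $0.50
```

Under these assumptions, doubling throughput on the same hardware halves the cost per token; equivalently, the same hourly spend serves twice as many requests.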

Beyond direct cost savings, more efficient inference has broader market and social effects. Faster models make applications more responsive and can enable use cases that latency previously ruled out, such as real-time language translation, near-instant content generation, or sophisticated autonomous systems. By making AI cheaper and faster, these technologies also help democratize advanced AI, giving smaller businesses and developers access to capabilities once reserved for large corporations with massive budgets. That, in turn, can spur innovation across industries and accelerate the integration of AI into everyday life, potentially creating new services, products, and jobs.

Looking Ahead: Challenges and Opportunities

The rapid commercialization of projects like SGLang into RadixArk signals a pivotal moment in the AI infrastructure landscape. The demand for efficient AI inference is expected to continue its upward trajectory as AI permeates more sectors and applications. However, this burgeoning market also presents its share of challenges. The intense competition from well-funded rivals like vLLM, Baseten, and Fireworks AI means RadixArk must continuously innovate and differentiate its offerings. Maintaining a delicate balance between fostering the open-source SGLang community and developing proprietary commercial services will be crucial for long-term success. The loyalty and contributions of the open-source community are invaluable, but monetization strategies must be carefully managed to avoid alienating these crucial supporters.

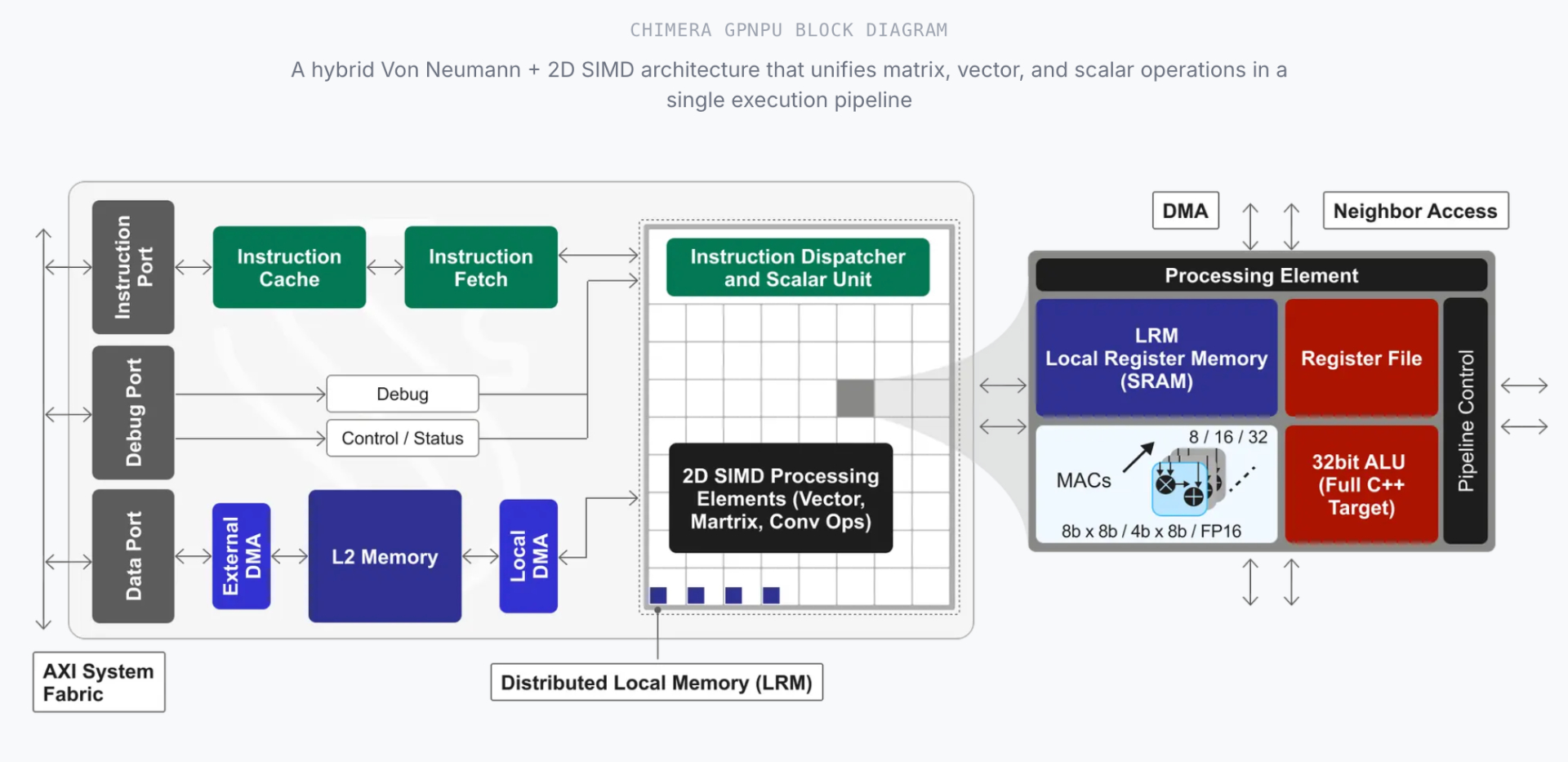

Technological shifts pose a further challenge. AI is evolving rapidly, with new model architectures, hardware (such as new Nvidia GPU generations and custom accelerators like Google’s TPUs), and deployment strategies emerging regularly. RadixArk and its peers must adapt their optimization techniques to these new paradigms to stay relevant. The opportunities are nonetheless large: the global AI market is projected to grow substantially, with inference accounting for an ever-larger share of overall spending. Companies that can unlock greater efficiency stand to capture significant market share and help shape how AI is deployed. The substantial investment flowing into the sector reflects the strategic importance of inference optimization and places RadixArk and similar ventures at the forefront of AI infrastructure.