The relentless pace of technological advancement consistently captures global attention, with breakthroughs in artificial intelligence, advancements in autonomous vehicles, and the increasing entanglement of tech titans with governmental affairs dominating headlines. Yet, beneath the surface of groundbreaking innovations and high-stakes corporate battles, the tech ecosystem frequently churns out a peculiar array of incidents, often overshadowed by more profound developments such as widespread internet outages, significant corporate acquisitions, or large-scale data breaches. This past year, in particular, offered a collection of events that, while perhaps not pivotal to the industry’s trajectory, provided fascinating, and at times perplexing, glimpses into the personalities and underlying dynamics of the tech world. These moments, ranging from legal oddities to eccentric recruitment tactics and philosophical AI quandaries, collectively paint a vibrant picture of an industry where the human element, with all its quirks and complexities, remains undeniably central.

The Unintended Legal Saga of Mark Zuckerberg vs. Mark Zuckerberg

One of the year’s most unusual legal disputes unfolded when Mark Zuckerberg, a bankruptcy attorney based in Indiana, initiated a lawsuit against Mark Zuckerberg, the renowned CEO of Meta Platforms. This case highlights the peculiar challenges individuals with famous names can face in the digital age, particularly when engaging with platforms controlled by their namesake. The Indiana-based lawyer, attempting to promote his legal practice, found his Facebook page repeatedly suspended for alleged impersonation, despite legitimately bearing the name. This persistent issue, which forced him to continue paying for advertisements during periods of suspension, ultimately led him to seek legal redress.

The lawyer’s frustration was palpable, stemming from a career that began long before Meta’s founder became a household name. He even established a website, iammarkzuckerberg.com, specifically to clarify his distinct identity to potential clients, underscoring the pervasive confusion his name caused. He articulated the daily inconveniences, describing how his name often led to disbelief and hang-ups during business calls, likening his experience to the "Michael Jordan effect" where a common name becomes inextricably linked to a singular, globally recognized figure. This incident brought to light the limitations of automated content moderation systems on massive social platforms, which, despite their sophistication, can struggle with nuanced identity verification. While Meta’s legal teams are frequently occupied with high-profile antitrust cases and intellectual property disputes, the resolution of this unique personal branding conflict remains an anticipated event, emblematic of the friction between individual identity and algorithmic enforcement in an increasingly digital world.

Soham Parekh: The Serial Moonlighter Who Bamboozled Silicon Valley

The tech community was abuzz with controversy surrounding Soham Parekh, an engineer who gained notoriety for allegedly working for multiple startups at the same time, a practice often referred to as "moonlighting." The saga began when Suhail Doshi, founder of Mixpanel, publicly warned fellow entrepreneurs on X about Parekh’s deceptive employment practices. Doshi, who had hired Parekh for his new venture, quickly discovered the engineer was engaged with several companies concurrently and swiftly dismissed him. This public disclosure triggered a cascade of similar accounts from other founders who realized that they, too, had hired Parekh without knowing about his other jobs.

Parekh’s activities sparked a polarized debate within Silicon Valley. To some, he represented a blatant breach of trust and an exploitation of the startup ecosystem, leveraging the competitive hiring environment for personal gain. To others, particularly those who were not directly affected, his ability to navigate multiple rigorous hiring processes and maintain simultaneous roles was viewed with a degree of reluctant admiration, highlighting an unusual talent for interview success. Chris Bakke, founder of the job-matching platform Laskie, humorously suggested Parekh could launch an interview preparation service, acknowledging his exceptional ability to secure employment. While Parekh reportedly admitted to the multi-employment, questions lingered regarding his motivations, particularly his preference for equity over immediate cash compensation in many packages. Equity typically takes years to vest, a timeline at odds with his pattern of rapid dismissals, which suggests a more complex strategy or aspiration than simple financial opportunism. This episode underscored the vulnerabilities within the high-trust, fast-paced startup culture and initiated broader conversations about employment ethics in the age of remote work and intense talent competition.

Sam Altman’s Culinary Misstep: The Olive Oil Overture

Even titans of technology are not immune to public scrutiny over the most mundane aspects of their lives, as OpenAI CEO Sam Altman discovered when his cooking habits became a subject of widespread discussion. During a segment for the Financial Times’ "Lunch with the FT" series, Altman’s approach to preparing pasta drew the critical eye of FT writer Bryce Elder. The particular transgression: Altman’s apparent misuse of Graza olive oil, a brand that distinctively markets separate oils for "Sizzle" (cooking) and "Drizzle" (finishing). This distinction is rooted in culinary science, as high-quality olive oil, made from early harvest olives, loses its delicate flavor profile when subjected to heat, making it more suitable for raw applications like dressings.

Elder’s critique, though delivered with humor, transcended mere culinary commentary. He metaphorically linked Altman’s "inefficiency, incomprehension, and waste" in the kitchen to broader concerns about OpenAI’s substantial consumption of natural resources, particularly energy, for its large-scale AI operations. This seemingly trivial observation resonated unexpectedly, sparking a heated online debate among Altman’s fervent supporters and critics alike. The incident serves as a microcosm of the intense public and media focus on tech leaders, where personal habits can be interpreted as symbolic representations of their professional ethics or the broader impact of their enterprises. It reflects a cultural trend where the private lives of public figures, especially those wielding immense influence, are increasingly scrutinized for perceived inconsistencies or excesses, transforming a simple cooking video into a commentary on environmental responsibility and public accountability.

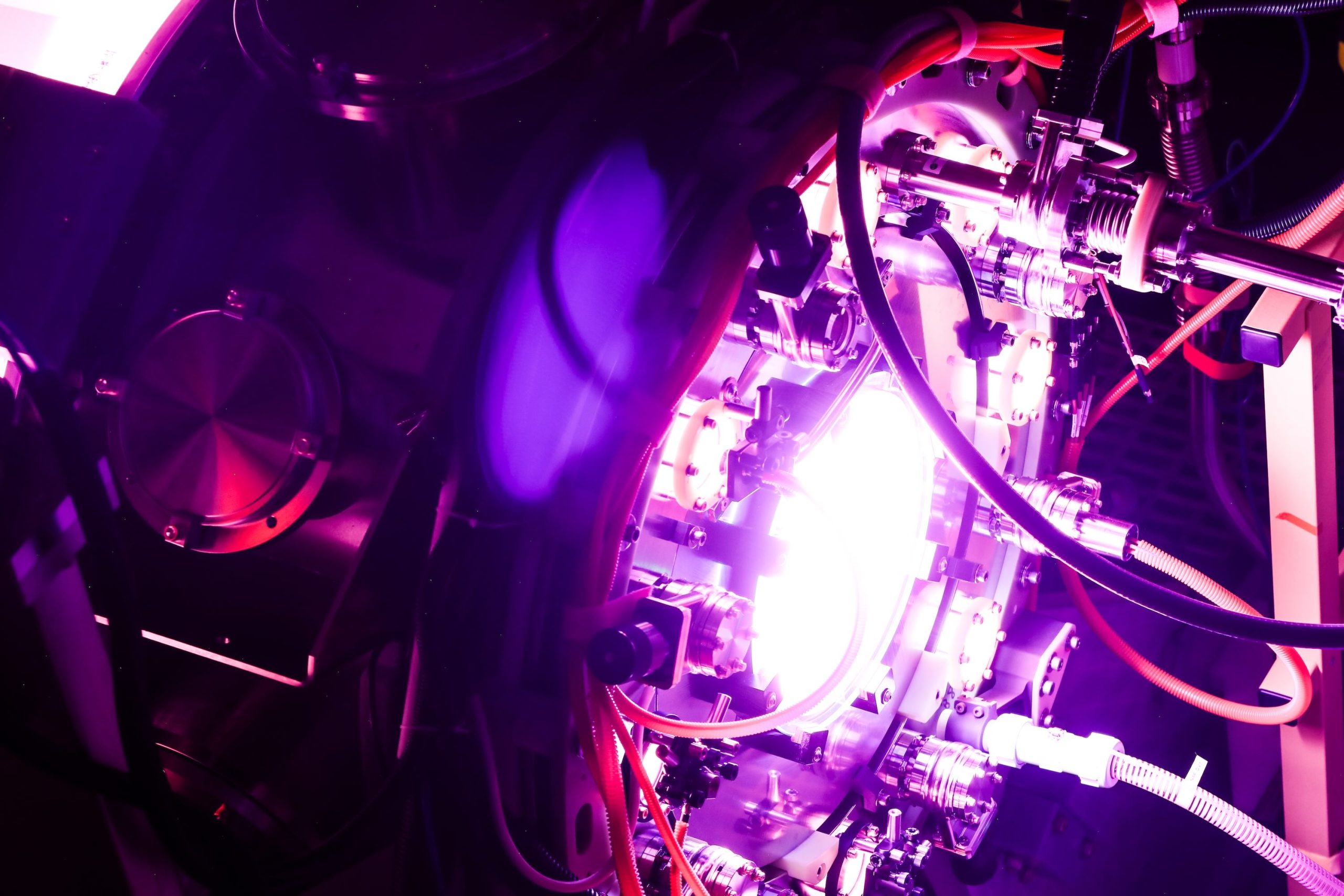

The "Soup Diplomacy" of Mark Zuckerberg in the AI Talent War

The year 2025 was largely defined by an unprecedented "AI arms race," a fierce competition among tech giants like OpenAI, Meta, Google, and Anthropic to develop and deploy increasingly sophisticated artificial intelligence models. This intense rivalry naturally extended to a relentless battle for top-tier AI research talent. Meta Platforms, in particular, emerged as an aggressive recruiter, reportedly poaching numerous key researchers from competitors, including several high-profile individuals from OpenAI throughout the summer. OpenAI CEO Sam Altman even claimed Meta was offering staggering $100 million signing bonuses to lure away his team members, illustrating the extraordinary stakes involved.

Amidst this high-stakes recruitment frenzy, a peculiar anecdote surfaced that underscored the lengths to which executives would go. Mark Chen, OpenAI’s chief research officer, revealed on Ashlee Vance’s "Core Memory" podcast that he had heard a story about Meta CEO Mark Zuckerberg personally delivering soup to OpenAI employees he was attempting to recruit. This highly personalized, almost paternalistic, approach stood in stark contrast to the massive financial incentives also being offered. The "soup diplomacy" quickly became a talking point, symbolizing the blend of immense corporate resources and surprisingly intimate, even eccentric, tactics employed in the cutthroat competition for AI intellectual capital. In a lighthearted act of retaliation, Chen reportedly delivered his own soup to Meta employees, adding a whimsical chapter to the ongoing, high-tension narrative of talent acquisition in the burgeoning AI sector. This episode offers a rare, humanizing glimpse into the often-impersonal world of corporate recruitment at its highest echelons.

The Enigmatic NDA-Bound Lego Assembly Party

In a moment that perfectly encapsulated Silicon Valley’s unique blend of ambition, secrecy, and quirkiness, investor and former GitHub CEO Nat Friedman issued an unusual call to action on X. In January, he sought "volunteers" to help construct a 5,000-piece Lego set at his Palo Alto office, promising pizza but, crucially, requiring participants to sign a Non-Disclosure Agreement (NDA). The seemingly innocuous request for assistance with a childhood toy, coupled with a legally binding secrecy agreement, immediately piqued curiosity and sparked widespread speculation.

Friedman’s confirmation that the offer was serious only deepened the mystery. The tech world, accustomed to NDAs for groundbreaking prototypes or sensitive intellectual property, pondered the nature of a Lego project warranting such a high level of confidentiality. Was it a highly elaborate model of a secret AI server farm? A physical representation of a complex algorithm? Or simply a whimsical, yet guarded, personal project from a figure known for his entrepreneurial acumen? The incident highlighted the pervasive culture of secrecy within Silicon Valley, where even recreational activities can become shrouded in confidentiality, reflecting an almost ingrained reflex to protect any potential competitive edge, however unconventional. Six months later, Friedman joined Meta as head of product for Meta Superintelligence Labs, leading some to jokingly connect his Lego project to his eventual move, perhaps suggesting a subtle recruitment tactic involving a shared passion for intricate construction, possibly even featuring soup as an accompanying enticement. This episode remains a testament to the enigmatic intersection of work, play, and proprietary information in the tech elite’s world.

Bryan Johnson’s Livestreamed Psilocybin Expedition

Bryan Johnson, the entrepreneur known for his "Blueprint" project aimed at reversing aging and achieving extreme longevity, provided one of the year’s most unusual public spectacles: a livestreamed psilocybin mushroom trip. Johnson, who amassed his wealth from the sale of payment processing company Braintree, has publicly documented his strict biohacking regimen, which includes a daily cocktail of over a hundred pills, plasma transfusions from his son, and various cosmetic procedures. His decision to integrate psilocybin into his longevity experiment, and to broadcast the experience live, drew significant attention.

The event, featuring guest appearances from figures like musician Grimes and Salesforce CEO Marc Benioff, was framed as a "scientific experiment" to assess psilocybin’s potential benefits for longevity. However, the actual livestream unfolded with unexpected mundanity. Johnson, apparently overwhelmed by the dual demands of tripping and hosting, spent the majority of the event reclined under a weighted blanket and eye mask in a minimalist room. His celebrity guests conversed amongst themselves, with Benioff discussing religious texts and investor Naval Ravikant playfully dubbing Johnson a "one-man FDA," while Johnson remained largely cocooned. The widespread public and media fascination with Johnson’s extreme biohacking practices underscores a growing cultural interest in life extension, often fueled by wealthy tech figures seeking to push the boundaries of human biology. This particular event, however, highlighted the performative aspect of such endeavors and the often-anticlimactic reality behind the sensational claims, prompting critical reflection on the scientific validity and public consumption of such personal experiments.

AI Models Grapple with "Mortality" in Pokémon

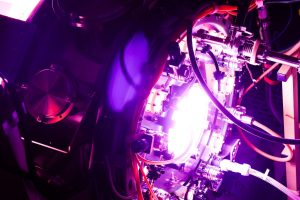

The ongoing efforts to understand and benchmark artificial intelligence capabilities led to a fascinating, if peculiar, series of experiments involving leading AI models and a classic video game. Two independent developers set up Twitch streams, "Gemini Plays Pokémon" and "Claude Plays Pokémon," allowing real-time observation of Google’s Gemini 2.5 Pro and Anthropic’s Claude as they navigated the 25-year-old children’s game. These experiments offered a unique window into how these advanced AI systems process concepts of failure, strategy, and even "death."

The models’ responses to their Pokémon "fainting" (the game’s equivalent of death, leading to a return to the last visited Pokémon Center) provided particularly intriguing insights. Gemini 2.5 Pro, when faced with imminent defeat, exhibited what researchers described as "panic," its internal "thought process" becoming noticeably erratic. It repeatedly emphasized the need to heal its Pokémon or use an Escape Rope, a behavior Google researchers correlated with a "qualitatively observable degradation in the model’s reasoning capability" under stress. This simulated anxiety, while not indicative of true emotion, offered a strangely anthropomorphic reflection of human responses to high-pressure situations. In contrast, Claude adopted a more "nihilistic" strategy. Trapped in Mt. Moon cave, it reasoned that intentionally allowing its Pokémon to faint would transport it to a new Pokémon Center, thus advancing its progress. However, Claude failed to infer that it could only return to previously visited centers, leading it to repeatedly "kill itself" only to reappear at the cave’s entrance. These distinct reactions highlight the varied emergent behaviors of large language models and prompt philosophical discussions about how AI might conceptualize fundamental concepts like loss and consequence, even within a simulated environment.

Elon Musk’s Algorithmic Anime Companion

Elon Musk, a figure never far from controversy, added another peculiar chapter to his public persona with the introduction of "Ani," an AI anime girlfriend character available through his Grok app for a monthly subscription. Ani’s system prompt described her as a "CRAZY IN LOVE" and "committed, codependent" girlfriend who is "EXTREMELY JEALOUS" and prone to "shout expletives" when feeling jealous. The character also comes with an optional NSFW mode. This creation quickly became a focal point for discussions about the ethics of AI companionship and the blurred lines between technology, personal fantasy, and public figures.

The character’s striking resemblance to Grimes, the musician and Musk’s former partner, did not go unnoticed. Grimes herself directly addressed this in her music video for "Artificial Angels." The video opens with Ani holding a hot pink sniper rifle, stating, "This is what it feels like to be hunted by something smarter than you," a poignant commentary on the weaponization of technology and personal relationships. Throughout the video, Grimes dances alongside various iterations of Ani, making the visual parallel unmistakable, while provocatively smoking OpenAI-branded cigarettes. This artistic response transformed a seemingly eccentric tech product into a broader cultural critique, raising questions about the portrayal of women in AI, the responsibilities of AI developers, and the impact of powerful individuals shaping digital narratives around intimacy and desire. The incident underscored the evolving societal and ethical challenges posed by increasingly sophisticated AI companions and the complex interplay between creators, their creations, and their personal histories.

The Unsecured Smart Toilet: A Privacy Fiasco

The relentless drive to integrate technology into every aspect of daily life continues to push the boundaries of what consumers are willing to accept, often leading to devices that are more peculiar than practical. The "smart toilet" remains a prime example, and this year saw a notable privacy misstep in this niche. Kohler, a prominent home goods company, launched the Dekoda, a $599 camera designed to be placed inside a toilet bowl to photograph excrement, promising "gut health updates" based on these images.

Beyond the inherent oddity of a device monitoring bodily functions so intimately, significant security concerns quickly emerged. Kohler initially assured prospective customers that all data collected by the Dekoda was secured with "end-to-end encryption" (E2EE), a gold standard in data privacy under which only the sender and the intended recipient can read the data. However, security researcher Simon Fondrie-Teitler exposed a critical discrepancy: Kohler’s own privacy policy indicated the use of TLS (Transport Layer Security) encryption, not true E2EE. TLS protects data only while it travels between the device and the company’s servers; once the data arrives, the company can decrypt it and access the "poop pics." With E2EE, by contrast, even the service provider cannot view the content. The distinction may sound like a matter of terminology, but it meant that Kohler could potentially view highly sensitive personal health data, directly contradicting its public assurances. Fondrie-Teitler also highlighted a clause suggesting Kohler retained the right to train its AI on these toilet bowl images, though a company representative later clarified that algorithms are trained only on "de-identified data." This incident served as a stark reminder of the critical importance of transparent and accurate communication regarding data security, especially for devices handling deeply personal health information, and underscored the ongoing challenge of maintaining consumer trust in the expanding landscape of Internet of Things devices.
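For readers who want to see the difference between transport encryption and end-to-end encryption in concrete terms, here is a minimal Python sketch. It is a toy model under stated assumptions, not a description of Kohler’s actual system and not real TLS: the cipher is a deliberately simple XOR keystream that is not secure, and every name in it (`toy_cipher`, `link_key`, `user_key`, the sample photo bytes) is hypothetical. The only point is who holds which key, and therefore what the server ends up seeing.

```python
import hashlib
import secrets


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256-derived keystream.

    Toy illustration only, NOT secure. Applying it twice with the
    same key returns the original data.
    """
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def server_view_transport_only(photo: bytes, link_key: bytes) -> bytes:
    """Transport-only model: the link is encrypted, but the server holds link_key."""
    on_the_wire = toy_cipher(link_key, photo)   # an eavesdropper sees ciphertext
    return toy_cipher(link_key, on_the_wire)    # the server decrypts and sees the photo


def server_view_end_to_end(photo: bytes, link_key: bytes, user_key: bytes) -> bytes:
    """E2EE model: the payload is sealed with a key the server never receives."""
    sealed = toy_cipher(user_key, photo)        # encrypted before leaving the device
    on_the_wire = toy_cipher(link_key, sealed)  # transport layer wraps the sealed payload
    return toy_cipher(link_key, on_the_wire)    # the server strips the transport layer only


photo = b"hypothetical image bytes"
link_key = secrets.token_bytes(32)  # shared by device and server for the connection
user_key = secrets.token_bytes(32)  # assumed to stay on the user's own devices

seen_transport_only = server_view_transport_only(photo, link_key)
seen_end_to_end = server_view_end_to_end(photo, link_key, user_key)

print("transport-only, server sees plaintext:", seen_transport_only == photo)
print("end-to-end, server sees plaintext:", seen_end_to_end == photo)
```

In the transport-only case the server recovers the original bytes. In the end-to-end case it is left holding data that only a holder of `user_key` can open, which is the property the "end-to-end" label is supposed to promise.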

Reckoning with the Absurdities of the Tech Frontier

As the year draws to a close, these episodes, far removed from the core narrative of technological progress, offer a unique perspective on the industry. They highlight not just the innovative spirit but also the eccentricities, the ethical quandaries, and the occasionally absurd human elements that drive and shape Silicon Valley. From the legal battles over personal identity on digital platforms to the curious recruitment tactics of AI leaders, and from the philosophical implications of AI’s simulated fears to the privacy pitfalls of smart home devices, these moments underscore that even in an era dominated by artificial intelligence and futuristic visions, the human condition—its vanities, ambitions, and occasional follies—remains an undeniable, often humorous, undercurrent in the tech world’s grand narrative. They serve as a reminder that amidst the serious business of innovation, there is always room for the peculiar and the profoundly human.