OpenAI, a leading force in artificial intelligence development, has unveiled an audacious timeline for the evolution of its deep learning systems, projecting the advent of AI capable of independent scientific research by 2028. This ambitious vision, shared by CEO Sam Altman and Chief Scientist Jakub Pachocki during a recent livestream, outlines a rapid progression from current capabilities to systems that could fundamentally alter the landscape of discovery and innovation. The announcement coincided with a significant corporate restructuring, positioning OpenAI to secure the immense capital required to fuel these advancements while ostensibly adhering to its founding mission.

Defining the Future of Discovery: AI as a Research Partner

At the core of OpenAI’s near-term projections is the concept of an "intern-level research assistant" by September 2026, culminating in a "legitimate AI researcher" two years later. Pachocki clarified that this "AI researcher" is not a human individual but rather a sophisticated system "capable of autonomously delivering on larger research projects." This distinction is crucial, signaling a shift from AI as a mere tool for human researchers to an active, independent agent in the scientific process.

The vision extends beyond automating repetitive tasks or data analysis. Instead, it imagines AI systems formulating hypotheses, designing experiments, executing research methodologies, interpreting results, and possibly even publishing findings, all with minimal human intervention. Such an evolution would mark a profound paradigm shift, potentially accelerating breakthroughs across scientific disciplines at an unprecedented pace. The implications for fields like medicine, materials science, and fundamental physics are immense, promising faster drug discovery, novel material synthesis, and deeper insights into the universe’s workings.

The Superintelligence Frontier: A Decade Away?

Beyond the immediate goal of autonomous AI researchers, Pachocki made an even more striking prediction: the emergence of "superintelligence" within the next decade. He defined superintelligence as systems "smarter than humans across a large number of critical actions," a concept that has long captivated and concerned researchers and the public alike. This forecast places OpenAI at the forefront of a global discussion about the ultimate capabilities and potential ramifications of advanced AI.

The pursuit of superintelligence introduces complex ethical and philosophical questions. While proponents envision a future where AI tackles humanity’s most intractable problems, from climate change to disease eradication, critics and safety advocates raise concerns about control, alignment, and the potential for unintended consequences. Ensuring that superintelligent systems operate in alignment with human values and goals is considered by many to be the paramount challenge of our era. OpenAI has consistently emphasized its commitment to responsible AI development, indicating that safety and alignment research will be integral to its trajectory toward these advanced systems. This dual focus on rapid advancement and robust safety measures highlights the inherent tensions and profound responsibilities that accompany such a transformative quest.

Unlocking Potential: The Dual Engines of Progress

OpenAI’s strategy to achieve these ambitious goals rests on two foundational pillars: relentless algorithmic innovation and a dramatic scaling of "test time compute." Algorithmic innovation involves developing more efficient and powerful deep learning architectures, training methodologies, and learning paradigms that enable AI models to grasp complexity and generalize knowledge more effectively. This continuous refinement of the underlying intelligence mechanisms is critical for building systems capable of sophisticated reasoning and problem-solving.

Equally vital is the concept of "test time compute," which Pachocki described as essentially how long models spend thinking about problems. Current models, he noted, can handle tasks with a time horizon of roughly five hours and have demonstrated capabilities matching top human performers in challenging competitions such as the International Mathematical Olympiad. However, to achieve truly groundbreaking scientific discoveries, the next generation of AI will require vastly more computational resources dedicated to individual problems. Pachocki suggested that for major scientific breakthroughs, "it would be worth dedicating entire data centers’ worth of computing power to a single problem." This implies a future where AI systems might deliberate on a single complex challenge for days, weeks, or even months, drawing on immense processing power to explore vast solution spaces and identify novel insights.

This approach signifies a departure from merely optimizing for speed. Instead, it prioritizes depth of thought and thoroughness of exploration, leveraging sheer computational scale to mimic, and in specific domains surpass, human cognitive processes. The ability to "think" for extended periods, unburdened by human limitations, could unlock entirely new avenues of scientific inquiry and problem-solving.

A New Blueprint: OpenAI’s Corporate Transformation

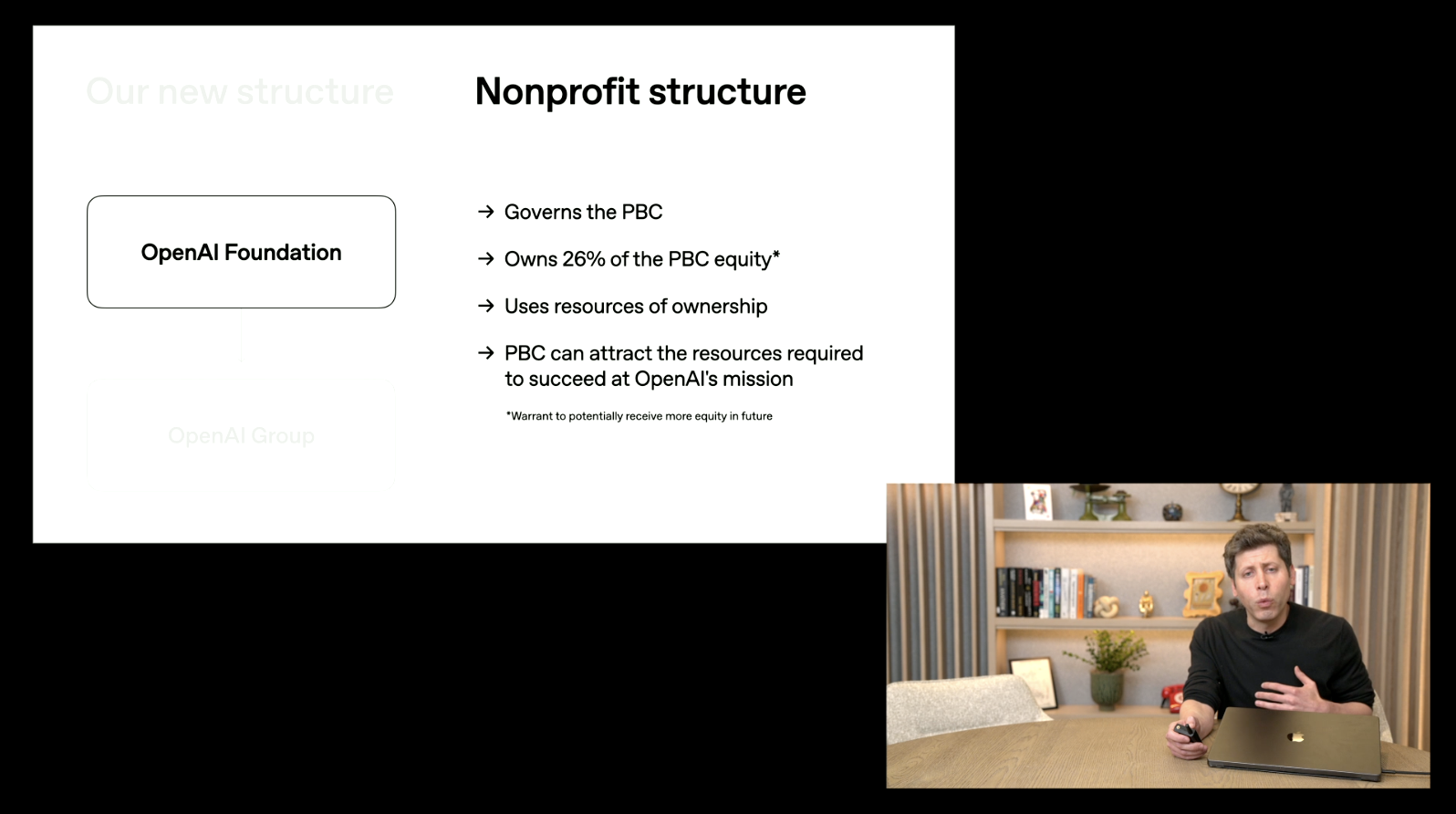

The announcement of these ambitious AI timelines was made on the same day OpenAI finalized its transition to a public benefit corporation structure. This significant corporate evolution marks a pivotal moment in the organization’s journey, moving away from its original non-profit roots while still aiming to uphold its core mission.

Historically, OpenAI began as a non-profit research laboratory in 2015, founded with the explicit goal of advancing digital intelligence in a way that benefits all of humanity, free from commercial pressures. However, the escalating computational and talent costs of cutting-edge AI research soon necessitated a more flexible financial model. In 2019, OpenAI introduced a "capped-profit" subsidiary, allowing it to raise substantial capital from investors while keeping its non-profit parent in control as the organization’s ethical guardian. This unique structure aimed to balance the need for vast resources with a commitment to safety and public benefit.

The latest restructuring solidifies this hybrid model, with the non-profit OpenAI Foundation now owning a substantial 26% stake in the for-profit entity. Crucially, the non-profit arm retains significant governance over the research direction, intended to keep the pursuit of advanced AI aligned with the original mission. The non-profit has also made a $25 billion commitment earmarked for using AI to cure diseases, alongside managing broader AI research and safety initiatives. This framework is designed to free the for-profit arm from the limitations of a pure non-profit charter, opening up unprecedented opportunities for capital raising and infrastructure investment while, in theory, safeguarding the responsible development of AI.

Powering the Future: A Trillion-Dollar Infrastructure Commitment

The sheer scale of OpenAI’s ambitions necessitates an equally monumental investment in infrastructure. Sam Altman revealed that the company has committed to building out 30 gigawatts of computing infrastructure, a staggering endeavor that carries an estimated $1.4 trillion financial obligation over the next few years. To put this figure into perspective, 30 gigawatts is roughly equivalent to the total electricity generation capacity of several small countries or a significant fraction of the entire U.S. data center capacity. This commitment underscores the company’s belief that future AI advancements are inextricably linked to the availability of massive computational power.

This colossal investment is expected to manifest in projects like the "Stargate" initiative, reportedly involving the construction of five new hyperscale data centers in collaboration with partners such as Oracle and SoftBank. Such an undertaking will have profound ripple effects across various industries. Demand for advanced semiconductor chips will skyrocket, placing immense pressure on manufacturers and potentially reshaping global supply chains. The energy sector will face unprecedented demand for clean, sustainable power to run these vast computing complexes. Furthermore, building and operating these facilities will create new job markets and drive innovation in cooling technologies, network infrastructure, and data center management. This isn’t just an investment in AI; it’s an investment in the foundational infrastructure of a future powered by advanced intelligence, and it may signal a new technological arms race for computational supremacy.

Navigating the New Era: Opportunities and Challenges

The prospect of autonomous AI researchers and superintelligence within a decade presents both unparalleled opportunities and formidable challenges. On the positive side, AI-driven scientific discovery could dramatically accelerate progress on humanity’s most pressing issues. Imagine AI systems sifting through vast biological datasets to identify cures for intractable diseases, designing novel materials with unprecedented properties, or optimizing renewable energy systems to combat climate change. The pace of innovation across medicine, physics, and technology development could accelerate sharply, ushering in a golden age of scientific breakthroughs.

However, the societal and cultural impacts of such advanced AI cannot be overstated. The emergence of autonomous AI researchers could significantly alter the landscape of human employment, particularly for those in knowledge-based professions. While some roles might be augmented, others could face displacement, necessitating proactive strategies for workforce adaptation and economic restructuring. Ethical considerations, such as the potential for bias in AI-generated research, the challenge of maintaining human oversight and control, and the "black box" nature of complex deep learning models, will become increasingly critical. Ensuring that AI’s decision-making processes are transparent, fair, and aligned with human values will be a constant, evolving challenge.

Viewed neutrally, OpenAI’s vision, while ambitious, aligns with a broader industry trend toward increasingly capable AI systems. However, the path to superintelligence is fraught with technical hurdles, including current AI’s limited ability to reason abstractly, apply common sense, and exhibit true creativity. The timeline presented by OpenAI is aggressive, even by industry standards, and will require sustained breakthroughs not just in computation but also in foundational AI theory. The emphasis on responsible development and alignment research is crucial, as the stakes of building systems that could surpass human intelligence are perhaps the highest humanity has ever faced.

In conclusion, OpenAI’s latest pronouncements outline a future where artificial intelligence transitions from a powerful tool to an autonomous partner in scientific discovery, potentially culminating in superintelligence within the decade. This transformative vision is underpinned by a strategic corporate restructuring and a staggering commitment to computational infrastructure. As humanity stands on the precipice of this new era, the blend of unprecedented opportunity and profound responsibility demands careful navigation, ensuring that the relentless pursuit of advanced intelligence ultimately serves the betterment of all.