Lambda, a provider of specialized artificial intelligence data center infrastructure, has announced a $1.5 billion funding round. The round, led by the investment firm TWG Global, underscores the escalating demand for the high-performance computing resources needed to train and deploy advanced AI models. The news follows closely on the heels of a multi-billion dollar agreement between Lambda and Microsoft, further cementing the company’s position as a critical enabler in the burgeoning AI ecosystem.

The New Frontier of AI Compute

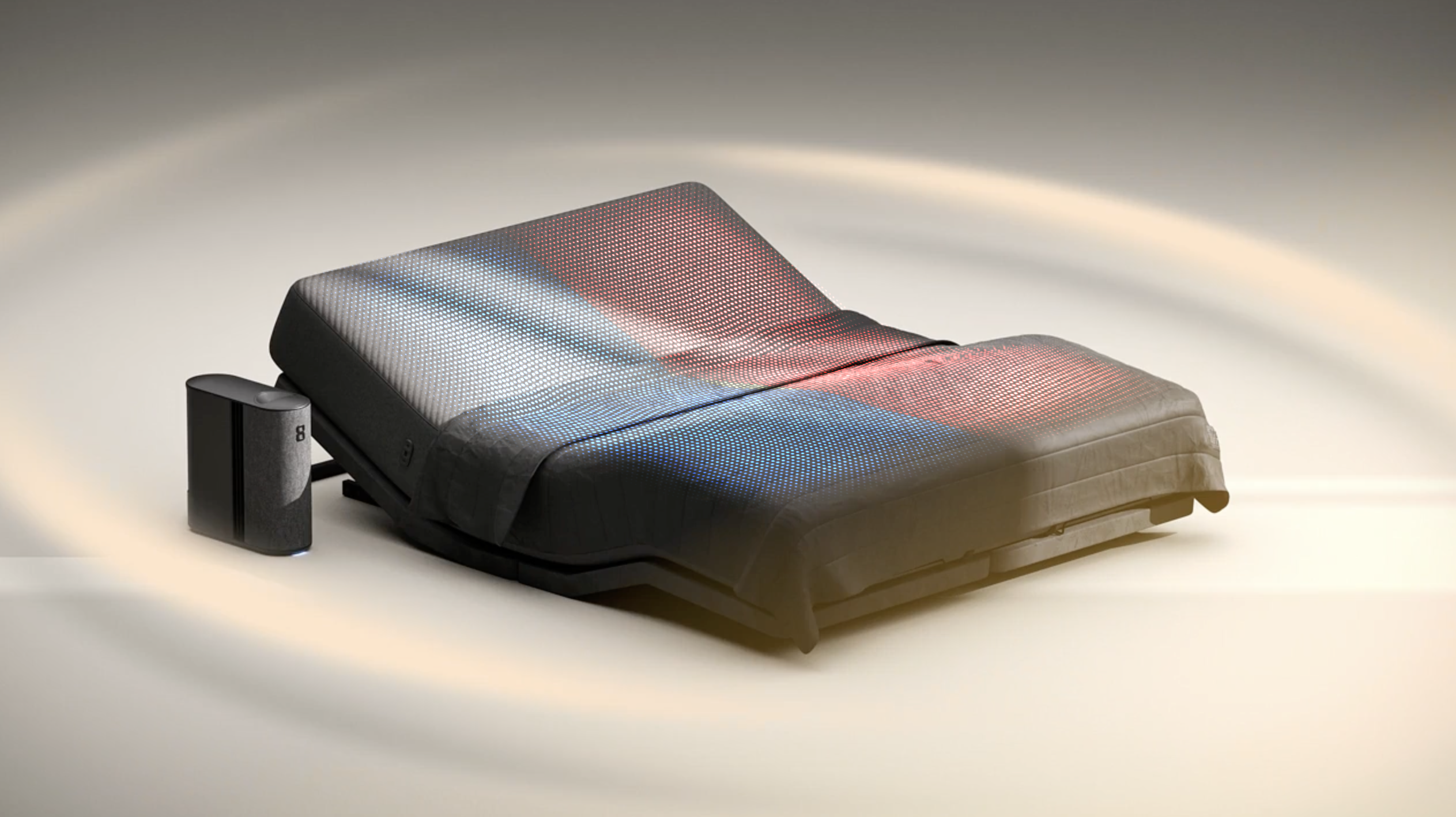

The artificial intelligence revolution, particularly the rapid advancements in generative AI, has created an unprecedented demand for specialized computing infrastructure. Unlike traditional cloud workloads, AI model training requires immense parallel processing power, primarily delivered by graphics processing units (GPUs). This has led to the emergence of companies like Lambda, which focus specifically on building and operating "AI factories" – data centers optimized with thousands of cutting-edge GPUs to serve the insatiable needs of AI developers and large enterprises.

TWG Global, the lead investor in this round, is a relatively new but financially powerful entity, with approximately $40 billion in assets. It was co-founded by two billionaires: Thomas Tull, known as the former owner of Legendary Entertainment, and Mark Walter, the founder and CEO of Guggenheim Partners. TWG Global’s portfolio is diverse, encompassing Walter’s stakes in high-profile assets such as the Los Angeles Lakers basketball team and the nascent Cadillac F1 racing team. Critically, the firm has established a dedicated $15 billion fund earmarked for AI investments and anchored by Abu Dhabi’s Mubadala Capital, signaling a long-term strategic commitment to the sector. This move highlights a broader trend in which capital from traditional finance and entertainment is increasingly flowing into deep technology, in recognition of AI’s transformative potential across industries. Earlier this year, TWG Global also announced a partnership with Elon Musk’s xAI and enterprise software giant Palantir to develop and deploy AI agents for enterprise applications, a further sign of its active engagement in the AI landscape.

A Strategic Capital Infusion

Lambda’s latest funding round far exceeds earlier market whispers, which had projected a raise in the hundreds of millions, potentially valuing the company north of $4 billion. While Lambda refrained from commenting on its current valuation, the sheer scale of the $1.5 billion raise strongly suggests a substantial increase from its previous valuation. In February, the company secured a $480 million Series D round, which PitchBook estimated placed its valuation at approximately $2.5 billion. The trajectory of these funding rounds illustrates not only Lambda’s rapid growth but also the intensifying investor confidence in the critical role of AI infrastructure providers. This significant capital injection will likely be channeled into expanding Lambda’s existing data center footprint, procuring more high-demand GPUs, and enhancing its specialized cooling and power infrastructure necessary to support increasingly dense and power-intensive AI hardware.

The capital also empowers Lambda to aggressively compete for talent in a highly specialized market, ensuring it can attract the engineers and operational experts needed to manage and scale its complex AI environments. Furthermore, it provides the financial runway for research and development into next-generation AI hardware and software integrations, potentially leading to proprietary optimizations that can give it a competitive edge.

The High Stakes of AI Infrastructure

The AI infrastructure market is characterized by intense competition and strategic maneuvers, particularly among hyperscale cloud providers and specialized GPU-as-a-service companies. Lambda competes directly with other specialized providers such as CoreWeave for contracts with major technology firms that require massive AI compute power. However, Lambda also positions itself as a supplier of "AI factories" to hyperscale cloud providers, indicating a flexible business model that can both compete and collaborate within the broader cloud ecosystem.

A key development preceding this funding round was Lambda’s announcement earlier this month of a multi-billion dollar agreement to supply Microsoft with AI infrastructure. This deal involves providing tens of thousands of Nvidia GPUs, which are the backbone of modern AI training. Nvidia itself is an existing investor in Lambda, highlighting its strategic interest in ensuring its dominant GPU technology is widely adopted and accessible.

This partnership with Microsoft is particularly notable given recent dynamics in the AI infrastructure space. Microsoft was previously CoreWeave’s largest customer, purchasing approximately $1 billion worth of services in 2024. However, a significant shift occurred in March when OpenAI, a key Microsoft partner and a leader in generative AI, entered into a $12 billion deal with CoreWeave. This move by OpenAI, potentially aimed at diversifying its compute resources or securing dedicated capacity, may have prompted Microsoft to expand and diversify its own AI infrastructure partnerships. Lambda’s new agreement with Microsoft can be seen as a strategic response, ensuring Microsoft has robust and redundant access to the compute power required for its internal AI initiatives, Azure AI services, and future collaborations.

Navigating the Competitive Landscape

The competition for AI compute resources is not merely a financial contest; it’s a strategic battle for technological leadership. Companies like Lambda and CoreWeave are racing to acquire the latest Nvidia GPUs, secure prime locations for data centers, and develop efficient cooling and power solutions to handle the immense energy demands of AI workloads. The ability to quickly deploy and scale these "AI factories" is a significant differentiator.

Hyperscale cloud providers like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure are also heavily investing in their own AI infrastructure. However, specialized providers often claim advantages in terms of customizability, direct access to raw GPU power, and potentially more cost-effective solutions for specific, highly intensive AI workloads. This creates a fascinating dynamic where hyperscalers might leverage specialized partners to augment their own offerings or to gain access to specific hardware configurations more quickly than they could build out themselves.

The involvement of prominent investors like TWG Global, backed by figures like Thomas Tull and Mark Walter, signifies the mainstreaming of AI infrastructure as a prime investment opportunity. Their understanding of long-term market trends and ability to deploy massive capital can accelerate the growth of these specialized firms, potentially shaping the future landscape of AI development.

The Power Behind the Models: Market Dynamics

The market for AI infrastructure is driven by several powerful forces. Firstly, the rapid growth in the size and complexity of AI models, such as large language models (LLMs) and diffusion models, translates directly into a corresponding increase in the computational power required for their training and inference. Secondly, the scarcity of high-end GPUs, particularly those from Nvidia, creates a bottleneck that specialized providers aim to alleviate by making substantial, early investments. Securing hardware ahead of demand can be a competitive advantage when other players face supply chain constraints.

Thirdly, the increasing adoption of AI across various industries—from healthcare and finance to manufacturing and entertainment—is generating a diverse range of AI workloads, each with specific infrastructure requirements. Specialized data centers can offer tailored environments, software stacks, and support services that are optimized for these unique demands, making them attractive to a broad spectrum of clients.

Beyond the immediate financial and technological implications, the expansion of AI data centers carries broader societal and environmental considerations. The significant energy consumption of these facilities is a growing concern, prompting calls for more sustainable power sources and innovative cooling technologies. As these data centers proliferate, their impact on local power grids, water resources, and carbon emissions will become increasingly important topics for public discourse and regulatory oversight.

Future Outlook for Specialized AI Providers

The robust investment in Lambda suggests a strong belief in the continued growth and strategic importance of specialized AI infrastructure providers. As AI technology matures and becomes even more integral to global economies, access to state-of-the-art compute resources will remain paramount. The question for these providers will be how to maintain their competitive edge against the immense resources of hyperscale cloud giants, while also navigating potential shifts in hardware technology or AI architectural paradigms.

The discussion around Lambda’s potential IPO, which had been circulating for months prior to this funding round, points to the sector’s maturity and to investor confidence in it. While the company declined to comment on its valuation or future public listing plans, such a significant private capital injection often gives a company more flexibility and time to scale operations without the immediate pressures of public markets. However, it also sets a higher bar for future growth and profitability.

Ultimately, the $1.5 billion investment in Lambda is more than just a financial transaction; it’s a testament to the foundational role that specialized AI infrastructure plays in powering the next generation of artificial intelligence. It highlights the strategic imperative for technology companies to secure compute capacity, the intense competition shaping the market, and the profound economic and technological shifts being driven by the ongoing AI revolution.