Amazon Web Services (AWS) has expanded its artificial intelligence offerings with new features that help enterprise customers build and fine-tune their own custom large language models (LLMs). The enhancements, announced at the company’s annual AWS re:Invent conference, reflect a broader shift in the AI landscape: away from generic foundation models and toward specialized, domain-aware models tailored to individual business needs. The new capabilities, built into Amazon Bedrock and Amazon SageMaker AI, aim to make advanced model customization more accessible and less resource-intensive for developers across industries.

The Evolving Landscape of Enterprise AI

The rapid rise of generative artificial intelligence has reshaped technology priorities for businesses worldwide. Initially, the focus was largely on the raw power and general applicability of frontier LLMs, such as those offered by OpenAI, Anthropic, and Google. These models showed unprecedented capabilities in text generation, summarization, translation, and coding. However, as enterprises began integrating them into their operations, a common problem emerged: general-purpose models often lacked the precise domain knowledge, context, and brand voice needed to perform well in specialized business settings. For instance, without further adaptation, a general LLM might struggle with the nuances of medical terminology, specific financial regulations, or proprietary manufacturing processes.

This realization has prompted a strategic pivot within the AI development community and among major cloud providers. The emphasis is no longer solely on who can build the largest or most capable foundation model, but on who can offer enterprises the most effective and efficient ways to customize these models to their own data, workflows, and objectives. Customization promises better performance, and it can also address critical concerns around data privacy, intellectual property, and competitive differentiation.

AWS’s Journey in Machine Learning and AI

AWS has been a formidable player in the machine learning space for over a decade, long before the recent generative AI boom. Its journey began with the provision of foundational compute and storage services, which were then leveraged by early adopters of machine learning. The launch of Amazon SageMaker in 2017 marked a significant milestone, offering a fully managed service to build, train, and deploy machine learning models at scale. SageMaker aimed to simplify the complex lifecycle of ML development, providing tools for data labeling, model training, hyperparameter tuning, and deployment.

With the advent of generative AI, AWS expanded its portfolio further, introducing Amazon Bedrock in 2023. Bedrock is a fully managed service that provides access to foundation models from AI companies such as Anthropic, AI21 Labs, Cohere, and Meta, alongside Amazon’s own models, including the Titan family. The initial vision for Bedrock was a "model zoo" that gave enterprises choice and flexibility as they experimented with different LLMs. However, the market quickly signaled that it wanted more than access: it wanted control and customization. The latest re:Invent announcements build directly on this foundation, moving AWS from a provider of foundation models toward a facilitator of specialized, customer-built AI.

Simplifying Custom Model Development with SageMaker AI

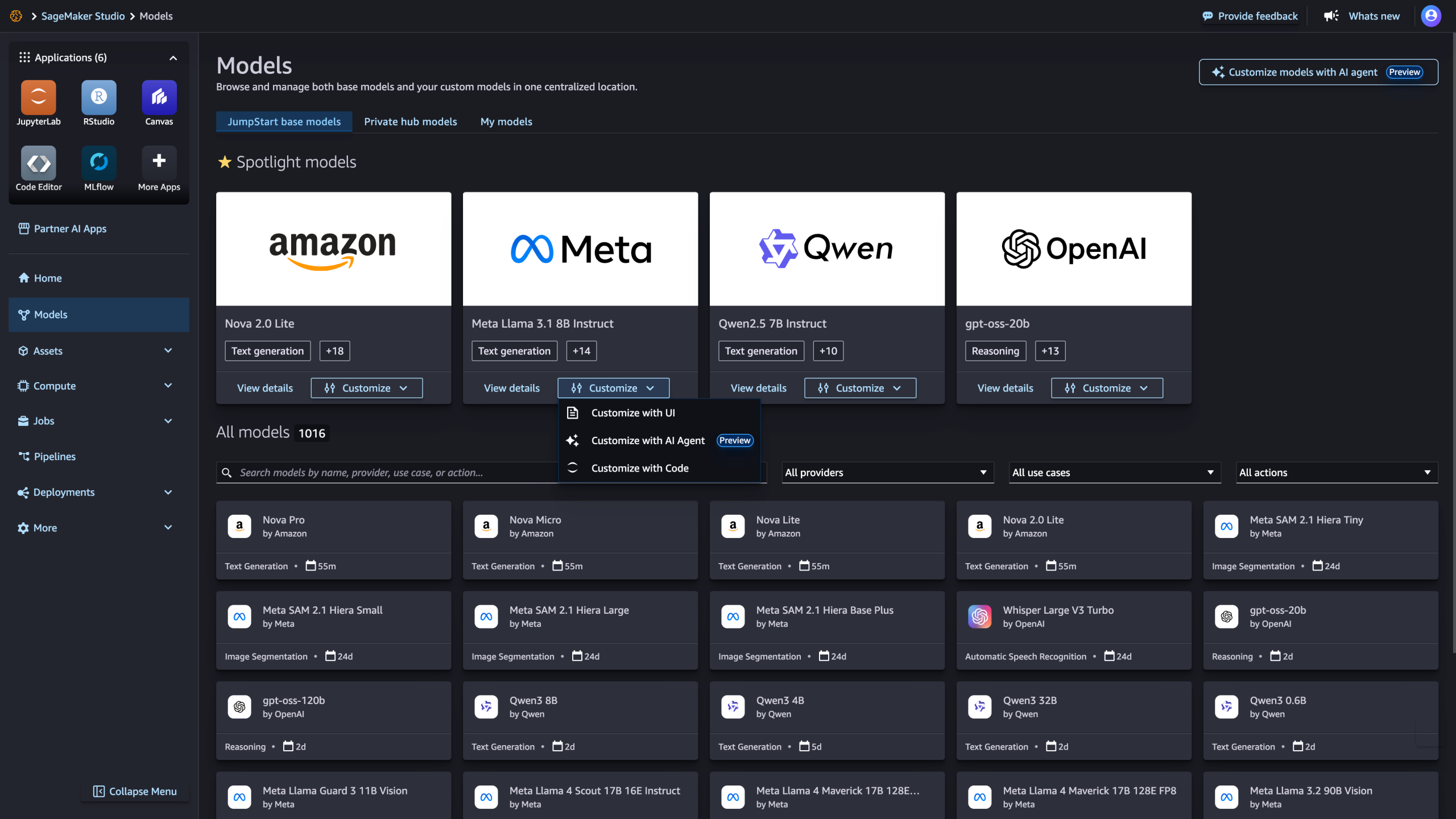

At the forefront of AWS’s customization push is serverless model customization in Amazon SageMaker AI. The capability lowers the barrier to entry for developers by abstracting away the underlying compute resources and infrastructure. Traditionally, fine-tuning large models required a working knowledge of GPU provisioning, cluster management, and resource optimization, tasks that can be daunting and time-consuming for many development teams. With serverless customization, developers can start a customization job without provisioning or configuring servers, and can focus on their data and the behavior they want from the model.

This streamlined approach offers two pathways for customization. The first is a self-guided, point-and-click interface for users who prefer a visual experience, such as data scientists or business analysts who want to iterate quickly on fine-tuning. The second, more advanced option is an agent-led experience, currently available in preview. Here, developers use natural language prompts to tell SageMaker AI what they want to achieve. For example, a developer could prompt, "Fine-tune this model to better understand medical terminology using my labeled clinical data." SageMaker AI then orchestrates the necessary steps, selects appropriate techniques, and runs the fine-tuning process automatically.
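To make the "labeled clinical data" in that prompt concrete, here is a minimal sketch of packaging labeled examples as JSON Lines (JSONL), one training record per line, for supervised fine-tuning. The `prompt`/`completion` field names are an assumption: the exact schema SageMaker AI or Bedrock expects depends on the chosen base model (some models expect a conversation-style format instead), so the model's documentation is the authority. The example notes and the helper names `to_record` and `to_jsonl` are hypothetical.

```python
import json

# Hypothetical labeled examples. Real training data would come from the
# organization's own (appropriately de-identified) clinical records.
labeled_examples = [
    {
        "note": "Pt c/o SOB on exertion, hx of CHF.",
        "expansion": "Patient complains of shortness of breath on exertion; "
                     "history of congestive heart failure.",
    },
    {
        "note": "BP 140/90, start ACEi.",
        "expansion": "Blood pressure 140/90; start an ACE inhibitor.",
    },
]


def to_record(example: dict) -> dict:
    """Map one labeled example to an assumed prompt/completion record."""
    return {
        "prompt": "Expand the abbreviations in this clinical note: " + example["note"],
        "completion": example["expansion"],
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_record(ex)) + "\n" for ex in examples)
```

Writing `to_jsonl(labeled_examples)` to a file such as `train.jsonl` and uploading it to Amazon S3 is the typical next step, since managed customization jobs generally read their training data from S3.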

This capability is not limited to Amazon’s proprietary Nova models; it also extends to a selection of popular open-weight models, including DeepSeek and Meta’s Llama, whose model weights are publicly available. Supporting these models is a strategic move: it acknowledges the active open-source AI community and lets enterprises choose models that better fit their licensing requirements or architectural preferences. Being able to fine-tune these models on proprietary data within a secure AWS environment is a significant advantage for enterprises concerned about data sovereignty and confidentiality.

Advanced Fine-Tuning with Bedrock’s Reinforcement Learning

Complementing SageMaker’s serverless capabilities, AWS is also rolling out Reinforcement Fine-Tuning (RFT) in Amazon Bedrock. RFT optimizes LLM behavior in a way that goes beyond standard supervised fine-tuning: developers steer a model toward desired outcomes by providing a reward function or selecting a pre-set workflow. Instead of only imitating labeled examples, the model learns by trial and error, receiving "rewards" for outputs that meet the specified criteria and "penalties" for outputs that do not.
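The reward idea can be sketched as a plain scoring function. This is a minimal, hypothetical Python example, not Bedrock's reward-function interface (which this article does not describe): it scores a customer-service reply by the fraction of required terms it mentions, then subtracts penalties for exceeding a word budget or for using phrases that hypothetical brand guidelines forbid. `BANNED_PHRASES`, `reward`, and the scoring weights are all illustrative assumptions.

```python
# Hypothetical brand-guideline violations; a real grader would encode the
# organization's own rules.
BANNED_PHRASES = ("calm down", "not my problem")


def reward(response: str, required_terms: list[str], max_words: int = 60) -> float:
    """Score a reply: +coverage of required terms, minus rule-based penalties."""
    text = response.lower()

    # Reward: fraction of required terms mentioned (0.0 to 1.0).
    hits = sum(1 for term in required_terms if term.lower() in text)
    score = hits / len(required_terms) if required_terms else 0.0

    # Penalty: reply exceeds the word budget (favors concise answers).
    if len(response.split()) > max_words:
        score -= 0.5

    # Penalty: reply contains a forbidden phrase (brand-voice violation).
    if any(phrase in text for phrase in BANNED_PHRASES):
        score -= 1.0

    return score


required = ["refund", "business days"]
good = ("Sorry about the delay. Your refund was issued today and should "
        "arrive in 3-5 business days.")
bad = "Calm down, the refund is not my problem."

print(reward(good, required))  # 1.0
print(reward(bad, required))   # -0.5
```

Rule-based checks like these are cheap and transparent; criteria that are harder to express as rules, such as empathy, are often scored by another model acting as a judge instead.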

The process is closely related to Reinforcement Learning from Human Feedback (RLHF), a technique that has been instrumental in aligning powerful foundation models with human values and instructions. The main difference is where the reward comes from: in RLHF it typically comes from a reward model trained on human preference ratings, whereas in RFT it comes from the reward function or workflow the developer supplies. By automating this complex customization process from start to finish, Bedrock’s RFT reduces the engineering effort needed to achieve nuanced model behavior. For instance, an enterprise could use RFT to make a customer service chatbot more empathetic or more concise, or to make it adhere strictly to brand guidelines, going beyond what simple instruction tuning might achieve. The capability could give developers finer control over how LLMs behave in real-world applications.
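How a reward signal "steers" a model can be shown at toy scale. The sketch below is a hypothetical illustration, not how Bedrock implements RFT: instead of an LLM generating tokens, the "policy" picks among three fixed candidate replies through a softmax over learnable scores (logits), and each reply carries a fixed reward, as if already scored by a grader. The update is the exact expected policy gradient (REINFORCE with the mean reward as baseline), which for a softmax policy is `p_j * (r_j - baseline)` for each logit `j`. Production RLHF and RFT systems instead sample outputs and update billions of parameters, commonly with algorithms such as PPO.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: turn scores into probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(rewards: list[float], steps: int = 500, lr: float = 1.0) -> list[float]:
    """Gradient ascent on expected reward for a softmax policy over fixed candidates.

    Uses the exact expected policy gradient, so the result is deterministic:
    dE[r]/dlogit_j = p_j * (r_j - baseline), where baseline = E[r].
    """
    logits = [0.0] * len(rewards)  # start with a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        baseline = sum(p * r for p, r in zip(probs, rewards))
        logits = [z + lr * p * (r - baseline)
                  for z, p, r in zip(logits, probs, rewards)]
    return softmax(logits)


# Hypothetical grader scores for three candidate chatbot replies:
# rambling, concise and on-brand, concise but off-brand.
rewards = [0.2, 1.0, 0.5]
print(softmax([0.0, 0.0, 0.0]))  # before training: uniform
print(train_policy(rewards))     # after: mass concentrates on reply 1
```

Replies that score above the current average gain probability and those below it lose probability, which is the reward-and-penalty dynamic described above, minus the sampling noise of a real training run.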

The White-Glove Approach: Nova Forge for Bespoke AI

Recognizing that some enterprises want an even deeper level of partnership and specialization, AWS has also introduced Nova Forge. Announced during AWS CEO Matt Garman’s keynote, Nova Forge is a bespoke service in which AWS’s own AI experts custom-build Nova AI models for enterprise customers. This white-glove service is aimed at large organizations with unique, complex requirements that may exceed the scope of self-service fine-tuning tools. Priced at $100,000 per year, Nova Forge is a premium offering that provides dedicated AWS resources to train, optimize, and deploy specialized models to the customer’s exact specifications.

This service directly addresses a key concern customers have raised: differentiation. As Ankur Mehrotra, general manager of AI platforms at AWS, put it, "If my competitor has access to the same model, how do I differentiate myself? How do I build unique solutions that are optimized for my brand, for my data, for my use case?" Nova Forge is AWS’s most hands-on answer to that question: a fully managed, high-touch option for enterprises seeking a distinctive, AI-driven competitive advantage.

Market Impact and Strategic Implications

AWS’s intensified focus on custom LLMs comes at a crucial point in the AI market. Earlier surveys indicated that enterprises often preferred models from AI-first companies such as Anthropic and OpenAI, or Google’s Gemini models, but the landscape is changing quickly. Deep model customization could become a significant competitive differentiator for AWS: as foundation models become increasingly commoditized, value shifts to the platforms and services that help enterprises apply those models most effectively to their own contexts.

This strategy has several implications:

- Democratization of Advanced AI: By simplifying the customization process through serverless computing and agent-led experiences, AWS is lowering the technical bar for enterprises to build sophisticated, specialized AI. This could lead to a broader adoption of AI across various business functions and industries, not just those with dedicated, large-scale ML engineering teams.

- Competitive Advantage through Specialization: Enterprises can now imbue their LLMs with proprietary knowledge, brand voice, and industry-specific terminology, creating AI applications that are truly unique and difficult for competitors to replicate without similar access to data and customization capabilities. This moves the competitive battleground from general AI prowess to domain-specific excellence.

- Data Sovereignty and Security: Performing fine-tuning within the secure confines of an AWS cloud environment, using proprietary data that never leaves the customer’s control, addresses critical concerns around data privacy, compliance, and intellectual property protection. This is a significant advantage for highly regulated industries.

- Skill Gap Mitigation: The simplified tools reduce the need for highly specialized machine learning engineers for certain customization tasks, allowing existing development teams to build and iterate on AI models more efficiently.

- Economic Efficiency: For many niche applications, fine-tuning a foundational model is significantly more cost-effective and faster than training a model from scratch. AWS’s offerings make this process more accessible.
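The cost argument in the last point can be made concrete with a back-of-the-envelope calculation. A widely used rule of thumb puts the compute for training a dense transformer at roughly 6 floating-point operations (FLOPs) per parameter per training token. The sketch below assumes that rule and uses hypothetical sizes chosen only for illustration: an 8-billion-parameter model, 2 trillion tokens for pretraining from scratch, and 10 million tokens of domain data for fine-tuning.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


# Hypothetical sizes, chosen only for illustration.
N_PARAMS = 8e9            # an 8-billion-parameter model
PRETRAIN_TOKENS = 2e12    # 2 trillion tokens for from-scratch pretraining
FINETUNE_TOKENS = 1e7     # 10 million tokens of domain data

pretrain = training_flops(N_PARAMS, PRETRAIN_TOKENS)
finetune = training_flops(N_PARAMS, FINETUNE_TOKENS)
ratio = pretrain / finetune

print(f"pretraining: {pretrain:.1e} FLOPs")
print(f"fine-tuning: {finetune:.1e} FLOPs")
print(f"ratio: {ratio:,.0f}x")
```

Because the parameter count appears in both estimates, the ratio reduces to the ratio of token counts: 2 × 10^12 / 10^7 = 200,000 with these assumed figures. The estimate covers a single pass of full fine-tuning and ignores data preparation, multiple epochs, evaluation, and serving, so it indicates orders of magnitude rather than a price.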

However, challenges remain. AWS must communicate the value of its customization tools clearly and show that they hold up against rivals’ offerings amid fierce competition. And while the tools simplify customization, the compute cost of extensive training and fine-tuning, especially for very large models, can still be substantial; at the high-touch end, Nova Forge alone costs $100,000 per year. Ultimate success will depend on how quickly and effectively enterprises can turn these new capabilities into tangible business outcomes.

In essence, AWS is not just shipping new features; it is offering enterprises a path from consuming generic AI to creating their own specialized models. If that shift takes hold, it could reshape how businesses use artificial intelligence, with domain-specific models becoming a key driver of innovation and competitive differentiation across industries.