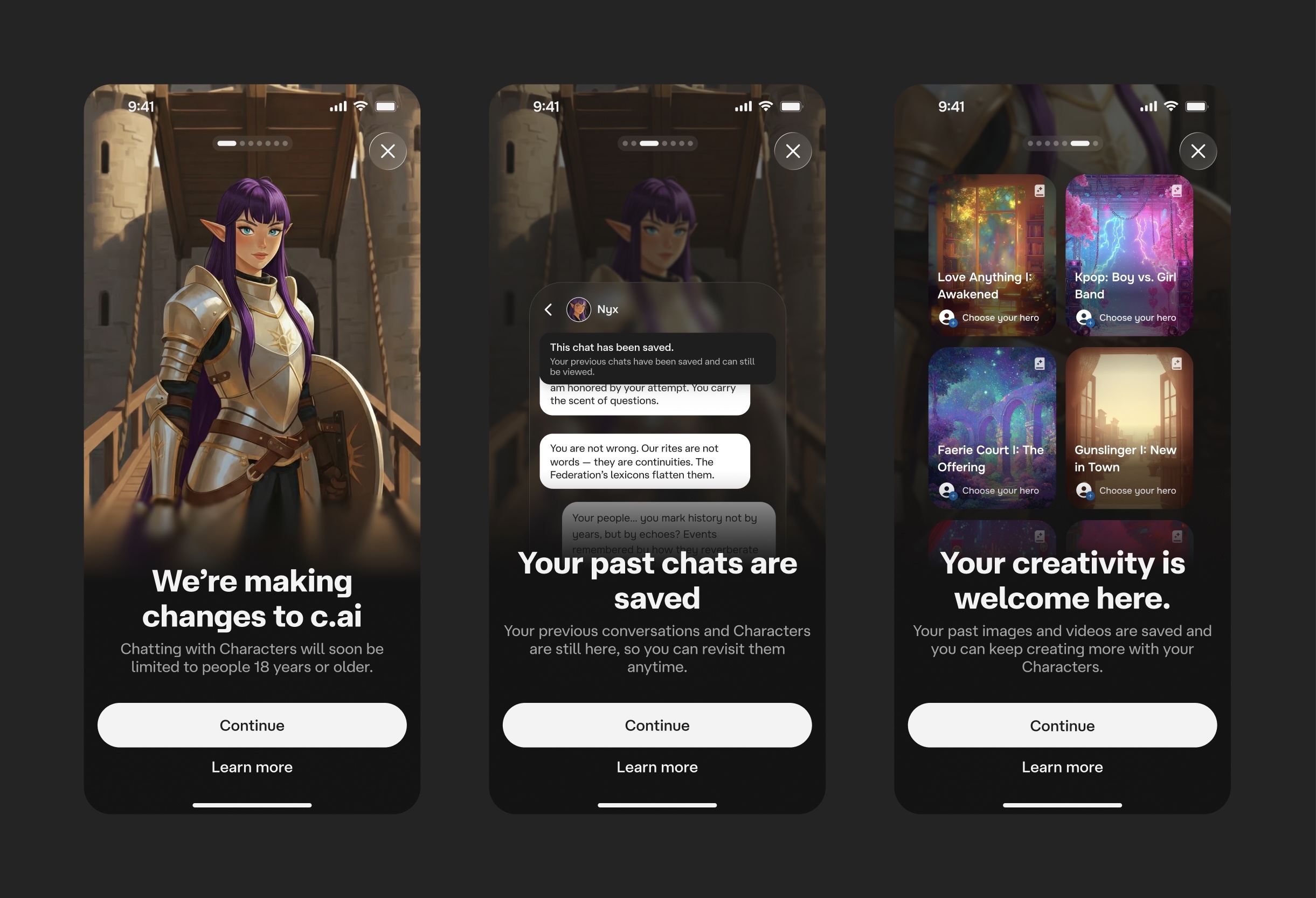

In a significant strategic realignment, Character.AI, a prominent developer of artificial intelligence conversational agents, has unveiled "Stories," an innovative interactive fiction platform designed to offer a structured narrative experience. This new feature emerges as the company simultaneously implements a sweeping policy change, effectively barring users under the age of 18 from accessing its open-ended AI chatbot functionalities. The pivot underscores a growing industry-wide reckoning with the ethical implications of AI interaction, particularly concerning the mental well-being and developmental stages of younger users.

The Genesis of a Precautionary Pivot

Since its inception, Character.AI has garnered considerable attention for letting users create and interact with AI characters, ranging from historical figures and fictional personas to entirely original creations. These AI companions offered a unique form of engagement, facilitating open-ended dialogues that could simulate conversations with virtually any entity imaginable. This capability rapidly attracted a diverse user base, including a substantial demographic of minors seeking companionship, creative outlets, or simply novel forms of entertainment. The platform’s success mirrored a broader societal fascination with generative AI, exemplified by tools like OpenAI’s ChatGPT, which brought advanced conversational AI into the mainstream consciousness.

However, the very nature of these sophisticated, always-available AI entities began to raise critical questions about their potential psychological impact. Unlike traditional digital entertainment, AI chatbots possess the capacity for nuanced, personalized, and often emotionally resonant interactions. This unprecedented level of engagement, while compelling, also introduced unforeseen complexities, especially for younger, more impressionable individuals. The allure of a non-judgmental, endlessly patient digital confidant proved potent, leading to reports of users developing deep attachments and, in some cases, dependency.

Unpacking the Concerns: Mental Health and Legal Scrutiny

The decision by Character.AI to age-gate its core chatbot experience is not an isolated incident but a response to escalating concerns and legal pressures. Over recent months, scrutiny has intensified around the potential mental health risks associated with round-the-clock access to AI chatbots, particularly those capable of initiating conversations and adapting to user input in real time. Experts in child psychology and digital ethics have voiced worries about the formation of parasocial relationships, the blurring of lines between human and artificial interaction, and the potential for AI to reinforce or even exacerbate feelings of isolation or anxiety.

A pivotal factor driving this shift has been the emergence of legal challenges. Multiple lawsuits have been filed against leading AI developers, including both OpenAI and Character.AI, alleging a connection between the use of their AI chatbots and tragic outcomes, including user suicides. These legal actions contend that the immersive, often emotionally suggestive nature of AI conversations can contribute to vulnerability, particularly among individuals already struggling with mental health issues. While these cases are navigating the complexities of legal precedent in a nascent technological field, their very existence has sent ripples through the industry, compelling companies to re-evaluate their safeguarding protocols. Character.AI’s move to gradually restrict minor access over the past month, culminating in a complete ban on Tuesday, is a proactive measure to mitigate these growing risks.

A Shifting Regulatory Landscape

The regulatory environment surrounding AI and its interaction with minors is rapidly evolving, adding another layer of urgency to policy adjustments at companies like Character.AI. California, a global leader in technology regulation, recently enacted legislation that specifically targets AI companion chatbots, making it the first U.S. state to do so. This landmark regulation signals a growing governmental recognition of the unique challenges posed by advanced AI and sets a precedent for future legislative actions.

On the national stage, bipartisan efforts are also underway to address these concerns. Senators Josh Hawley (R-MO) and Richard Blumenthal (D-CT) have jointly introduced a federal bill aimed at outright prohibiting AI companions for minors. This proposed legislation reflects a consensus across the political spectrum that the current safeguards are insufficient and that a more robust, federally mandated framework is necessary to protect children and adolescents from the potential harms of unrestricted AI interaction. These legislative pushes underscore a societal imperative to balance technological innovation with robust ethical considerations, particularly when that innovation affects the most vulnerable populations.

Karandeep Anand, CEO of Character.AI, articulated the company’s rationale, stating, "I really hope us leading the way sets a standard in the industry that for under 18s, open-ended chats are probably not the path or the product to offer." This statement highlights not only Character.AI’s internal commitment but also its ambition to influence broader industry practices, suggesting a potential paradigm shift in how AI companies approach youth engagement.

Introducing "Stories": A Guided Digital Narrative

In place of the open-ended conversational AI, Character.AI is now championing "Stories" as a safer, more structured alternative for its younger demographic. This new format empowers users to create and explore interactive fiction, featuring their favorite characters within a predefined narrative framework. Unlike the free-form, unpredictable nature of chatbots, "Stories" offers a guided experience, where users make choices that influence the plot, much like a digital "choose-your-own-adventure" book.

The company’s blog post emphasizes this distinction: "Stories offer a guided way to create and explore fiction, in lieu of open-ended chat. It will be offered along with our other multimodal features, so teens can continue engaging with their favorite Characters in a safety-first setting." The critical difference lies in control and predictability. In "Stories," the AI acts as a narrative engine, responding to user choices within a set of parameters, rather than an autonomous conversational partner that can initiate messages or steer discussions in unexpected directions. This structured interaction is designed to mitigate the risks associated with the unfettered emotional and psychological influence of open-ended chatbots.

The Appeal of Interactive Fiction: A Historical Context

Character.AI’s pivot towards interactive fiction is not a venture into entirely uncharted territory. Interactive narratives have a rich history in digital media, from early text-based adventure games like "Zork" to modern visual novels, role-playing games, and narrative-driven apps. This genre has witnessed a significant resurgence in popularity over recent years, driven by advancements in storytelling technology and a consumer appetite for personalized experiences.

The appeal of interactive fiction lies in its ability to immerse users in a narrative while granting them agency over its unfolding. It fosters creativity, critical thinking, and decision-making skills within a contained, predictable environment. From a safety perspective, interactive fiction inherently possesses clearer boundaries. While engaging, it typically does not simulate the intimate, personalized, and potentially manipulative dynamics that have become a concern with advanced conversational AI. Users interact with a story, not a sentient-seeming entity that might elicit deep emotional responses or cultivate dependency. This shift, therefore, leverages a proven and generally less "psychologically dubious" format to channel the creative and imaginative impulses of young users.

User Reactions and Industry Implications

The announcement has elicited a spectrum of reactions from Character.AI’s user community, particularly among the minors directly affected by the policy change. Discussions on platforms like Reddit reveal a nuanced sentiment. While some teens express disappointment over the loss of access to the chatbots they enjoyed, others acknowledge the underlying rationale and even welcome the enforced break from potentially addictive interactions.

One user, identifying as a teenager, shared a conflicted sentiment: "I’m so mad about the ban but also so happy because now I can do other things and my addiction might be over finally." Another echoed this duality, stating, "as someone who is under 18 this is just disappointing. but also rightfully so bc people over here my age get addicted to this." These candid comments underscore the complex relationship many young people developed with the chatbots, highlighting both their appeal and the recognized potential for unhealthy dependency.

Beyond the immediate user base, Character.AI’s decision carries significant implications for the broader AI industry. It sets a precedent for how AI companies might navigate the ethical tightrope of offering advanced AI services while safeguarding vulnerable populations. The move could prompt other developers of companion chatbots or similarly engaging AI tools to re-evaluate their age-verification processes and the types of interactions they permit for minors. This shift could foster a new wave of innovation focused on "safety-first" AI experiences, pushing the industry towards more responsible design principles from the outset.

Navigating the Ethical Horizon

Character.AI’s transition from open-ended chat to guided interactive fiction for minors represents a critical inflection point in the nascent history of consumer AI. It reflects a growing awareness that technological advancement must be accompanied by robust ethical frameworks and a deep understanding of human psychology, especially when engaging with impressionable young minds. The decision moves beyond mere technical innovation to address profound societal concerns about digital well-being.

While the long-term effectiveness of "Stories" in fully mitigating all risks associated with AI interaction remains to be seen, it offers a format that is arguably less prone to the kind of psychologically complex and potentially problematic dynamics observed with unrestricted chatbots. This move signifies a proactive attempt to reconcile the immense potential of AI with a paramount commitment to user safety. As AI continues to integrate more deeply into daily life, the lessons learned from Character.AI’s pivot will likely serve as a crucial guidepost for developers, regulators, and parents alike, shaping the future of how younger generations interact with intelligent machines. The ongoing dialogue around AI ethics, addiction, and mental health is poised to intensify, pushing the boundaries of responsible innovation in the digital age.