Bluesky, the rapidly expanding decentralized social media platform, has published its first transparency report, offering a detailed look at its operations, user engagement, and the growing demands placed on its Trust & Safety team throughout 2025. The report, a significant milestone for the young platform, details actions taken across several initiatives, including age-assurance compliance, monitoring of influence operations, and automated content labeling. It underscores Bluesky’s swift rise in the social media landscape while also revealing the content moderation and regulatory compliance challenges that accompany such rapid expansion.

The Ascent of a Decentralized Alternative

Bluesky emerged from a project initially funded by Twitter in 2019, conceived as an open-source, decentralized social networking protocol. Its development gained significant traction after the tumultuous changes at Twitter (now X) in late 2022, positioning Bluesky as a compelling alternative for users and developers disillusioned with centralized platforms. The platform launched as an invite-only beta in early 2023 and opened to the public in February 2024, building on the Authenticated Transfer (AT) Protocol. The protocol distinguishes Bluesky by enabling a "federated" model in which users can choose their hosting provider, migrate their data, and even build custom moderation tools and algorithmic feeds. This architecture stands in stark contrast to the monolithic structures of industry giants like X and Meta’s Threads, aiming to give users greater control and foster a more resilient, open internet ecosystem.

The year 2025 marked a period of explosive growth for Bluesky. Its user base grew by nearly 60%, from 25.9 million users at the close of 2024 to 41.2 million by the end of 2025. This figure includes accounts hosted on Bluesky’s own infrastructure as well as those running independently elsewhere on the broader AT Protocol network, showing the protocol’s growing adoption. The surge in users translated directly into heightened activity: users published 1.41 billion posts during 2025 alone, or 61% of all posts ever created on Bluesky, highlighting the accelerating pace of engagement. Media-rich content also grew significantly, with 235 million posts including images or videos, accounting for 62% of all media shared on the platform to date. This rapid expansion, while a sign of success, has inevitably amplified the complexity of platform governance and content moderation.

Navigating Content Moderation: A Growing Challenge

As Bluesky’s user base swelled, so did the volume of moderation reports submitted by its community. User reports rose 54% in 2025, from 6.48 million in 2024 to 9.97 million. Bluesky noted, however, that this rise closely tracked its 57% user growth over the same period, suggesting that reporting activity per user, a rough proxy for the prevalence of problematic content, remained relatively stable. About 3% of the total user base, or 1.24 million people, submitted reports during the year, showing the role an engaged community plays in maintaining platform standards.
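Bluesky's point that reports simply kept pace with user growth can be checked with a few lines of arithmetic. The Python sketch below uses only the figures quoted above and assumes year-end user totals as the denominator; with those totals, user growth comes out near 59% (the "nearly 60%" cited earlier) rather than the 57% Bluesky gave, which may reflect a different user measure.

```python
# Figures quoted from the transparency report coverage, in millions.
# Assumption: year-end user totals are the denominator; Bluesky's own
# 57% comparison may rely on a different user measure.
users = {2024: 25.9, 2025: 41.2}
reports = {2024: 6.48, 2025: 9.97}


def growth_pct(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


def reports_per_user(year: int) -> float:
    """User-submitted moderation reports per user for a given year."""
    return reports[year] / users[year]


report_growth = growth_pct(reports[2024], reports[2025])
user_growth = growth_pct(users[2024], users[2025])

print(f"report growth: {report_growth:.1f}%")  # report growth: 53.9%
print(f"user growth: {user_growth:.1f}%")      # user growth: 59.1%
for year in (2024, 2025):
    # 2024 -> 0.250, 2025 -> 0.242 reports per user
    print(year, f"{reports_per_user(year):.3f} reports per user")
```

On these numbers the per-user rate actually dips slightly, from about one report per four users to a little below that, which is consistent with Bluesky's reading.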

The reports fell into several key categories. "Misleading" content, predominantly spam, made up the largest share at 43.73% of the total, including 2.49 million reports specifically about spam, a persistent challenge for all social platforms. "Harassment" was the second most reported issue at 19.93%, or 1.99 million reports, covering a spectrum of behaviors. Hate speech was the largest specific subcategory within harassment, with approximately 55,400 reports, followed by targeted harassment (around 42,520), trolling (29,500), and doxxing (about 3,170). Together these subcategories account for only about 130,600 reports, less than 7% of the harassment total. Bluesky’s analysis indicated that a significant portion of harassment reports fell into a "gray area" of antisocial behavior, such as rude remarks, that did not meet the threshold for more severe classifications like hate speech.

"Sexual content" made up 13.54% of reports, with 1.52 million instances. The majority of these reports concerned content that was not properly labeled with metadata tags, which the AT Protocol uses to allow users to customize their viewing experience. This highlights a user-centric approach to moderation, where explicit content is not necessarily removed but categorized to allow individual preference. A smaller but critical number of reports addressed nonconsensual intimate imagery (approximately 7,520), child abuse content (around 6,120), and deepfakes (over 2,000). Reports related to "violence," totaling 24,670, were further broken down into subcategories such as threats or incitement (about 10,170 reports), glorification of violence (6,630 reports), and extremist content (3,230 reports). Beyond user input, Bluesky’s automated systems proactively flagged an additional 2.54 million potential policy violations, demonstrating a multi-layered approach to content governance.

The report also highlighted a notable success against antisocial behavior. After Bluesky introduced a system that identifies toxic replies and reduces their visibility by placing them behind an optional click, a mechanism similar to features on X, daily reports of such behavior fell by 79%. Across the year, reports per 1,000 monthly active users dropped by 50.9% between January and December. These metrics suggest that targeted product interventions can significantly improve the overall health of a platform’s discourse.

Escalating Legal and Regulatory Demands

Bluesky’s transparency report also brings into focus the increasing pressure from legal and governmental entities. Legal requests rose more than sixfold in 2025, from 238 in 2024 to 1,470. These requests came from a diverse range of sources, including law enforcement agencies, government regulators, and private legal representatives. The sharp rise reflects Bluesky’s growing prominence and, more broadly, a global trend of heightened scrutiny of social media platforms by governments and legal systems.

The growing volume of legal demands poses particular challenges for decentralized platforms like Bluesky. Although the AT Protocol is designed to distribute data and moderation capabilities, the Bluesky organization, as the host and operator of a significant portion of the network, remains a key point of contact for legal compliance. This places Bluesky in a delicate position, balancing its commitment to decentralization and user autonomy against its legal obligations across varied jurisdictions. Compliance can be resource-intensive, potentially diverting engineering and legal talent from product development. The trend also reflects a growing societal expectation that platforms be accountable for content published within their ecosystems, irrespective of their architectural design. As Bluesky continues to scale, managing these legal complexities is likely to become an increasingly critical part of its operational strategy, potentially shaping the evolution of its protocol and governance models.

Enforcement Actions and Policy Evolution

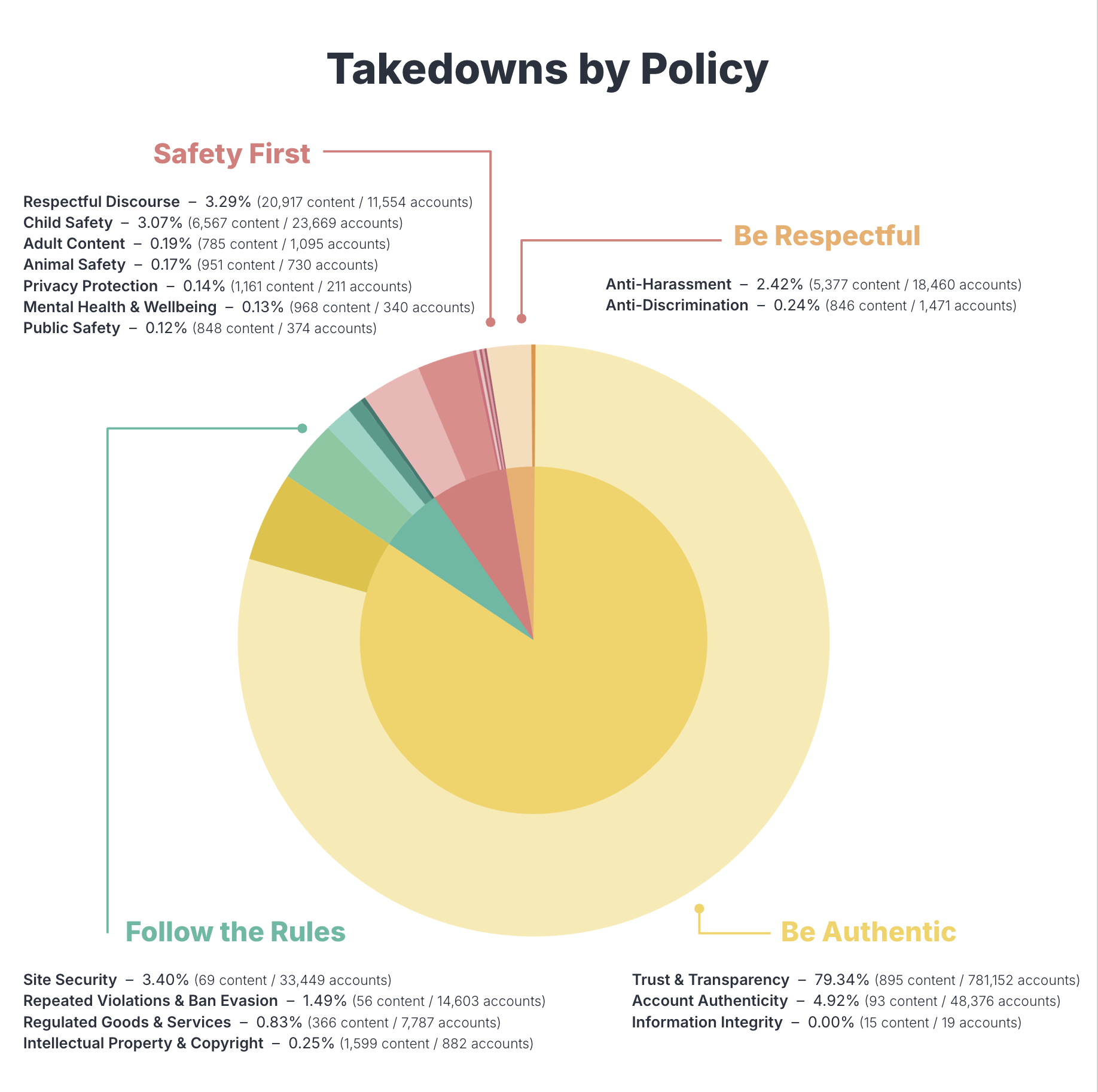

In response to both user reports and its own internal monitoring, Bluesky has evidently adopted a more assertive stance on content enforcement. The company had previously signaled its intent to become more aggressive in its moderation and enforcement policies, a commitment that is clearly reflected in the report’s figures. In 2025, Bluesky took down a total of 2.44 million items, encompassing both accounts and individual content records. This represents a substantial increase from the prior year, during which 66,308 accounts were taken down, with automated tools accounting for 35,842 of those removals. Additionally, human moderators removed 6,334 content records, while automated systems removed 282.

The platform issued 3,192 temporary suspensions in 2025 and made 14,659 permanent removals for ban evasion. Most permanent suspensions targeted accounts engaged in inauthentic behavior, spam networks, and impersonation, underscoring Bluesky’s efforts to maintain a genuine and trustworthy environment. The report also details action against coordinated disinformation campaigns, including the removal of 3,619 accounts suspected of participating in influence operations, many of which likely originated in Russia.

Bluesky’s enforcement philosophy, however, appears to favor labeling content over outright removal where appropriate. The platform applied 16.49 million labels to content in 2025, a 200% year-over-year increase, compared with 104% growth in account takedowns, from 1.02 million to 2.08 million. Most labels were applied to adult, suggestive, or nude content, consistent with the AT Protocol’s design of letting users control what they see through custom moderation filters. This nuanced strategy seeks to respect user autonomy and content diversity while still mitigating harm. The emphasis on labeling reflects Bluesky’s core decentralized ethos: it provides tools for user-level filtering rather than dictating a universal standard of acceptable content. This flexibility, however, may make it harder to comply with evolving international regulations that often mandate the prompt removal of certain types of content.
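Because labels, unlike takedowns, leave the final decision with each viewer, it may help to see how a client could act on them. The Python sketch below is a deliberately simplified, hypothetical model, not Bluesky's actual implementation: the label values `porn`, `sexual`, and `nudity` echo names used on Bluesky, but the `Post` type, the preference map, and the show/warn/hide rule (the most restrictive label wins) are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    SHOW = 0  # display normally
    WARN = 1  # display behind a click-through warning
    HIDE = 2  # drop from this viewer's feed entirely


@dataclass
class Post:
    uri: str
    text: str
    labels: set[str] = field(default_factory=set)  # e.g. {"nudity"}


# Hypothetical per-viewer preferences: one visibility choice per label value.
# Label names echo values used on Bluesky; the defaults are illustrative only.
DEFAULT_PREFS: dict[str, Visibility] = {
    "porn": Visibility.HIDE,
    "sexual": Visibility.WARN,
    "nudity": Visibility.WARN,
}


def resolve(post: Post, prefs: dict[str, Visibility]) -> Visibility:
    """Return the most restrictive visibility among a post's labels.

    Unlabeled posts, and labels the viewer has no preference for, are shown.
    """
    choices = [prefs.get(label, Visibility.SHOW) for label in post.labels]
    return max(choices, key=lambda v: v.value, default=Visibility.SHOW)


def render_feed(
    posts: list[Post], prefs: dict[str, Visibility]
) -> list[tuple[Post, Visibility]]:
    """Keep every post the viewer has not chosen to hide, with its visibility."""
    visible = []
    for post in posts:
        vis = resolve(post, prefs)
        if vis is not Visibility.HIDE:
            visible.append((post, vis))
    return visible


if __name__ == "__main__":
    # Hypothetical example posts; the at:// URIs are placeholders.
    feed = [
        Post("at://example/post/1", "landscape photo"),
        Post("at://example/post/2", "figure-drawing study", {"nudity"}),
        Post("at://example/post/3", "explicit image", {"porn"}),
    ]
    permissive = {"porn": Visibility.SHOW, "nudity": Visibility.SHOW}
    for name, prefs in (("cautious", DEFAULT_PREFS), ("permissive", permissive)):
        print(name, [(p.uri, v.name) for p, v in render_feed(feed, prefs)])
```

In this model the same labeled post is hidden for one viewer and shown to another, which is the user-level flexibility described above; it also illustrates why labeling alone does not take content down for everyone, the gap that removal-oriented regulations address.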

The Future of Decentralized Social Media Governance

Bluesky’s inaugural transparency report serves as a critical document, not just for the platform itself but for the broader discussion surrounding decentralized social media. The challenges detailed in the report – from managing burgeoning user reports to navigating a surge in legal demands – mirror those faced by established centralized platforms, yet they are amplified by Bluesky’s unique architectural principles. The market impact of successful decentralization could be transformative, potentially democratizing control over online discourse and fostering greater innovation. However, the report highlights that even a decentralized model cannot escape the fundamental need for robust content governance and legal compliance.

Culturally, the report reflects an ongoing tension between free expression and user control on one hand and the societal imperative to mitigate harm, misinformation, and illicit content on the other. Bluesky’s strategy of prioritizing user-controlled moderation through labeling demonstrates its commitment to decentralization but also places a significant burden on individual users to curate their own experience. The future success of Bluesky, and of other decentralized social networks, will likely depend on their ability to scale governance mechanisms effectively, striking a sustainable balance between platform responsibility and the core tenets of openness and user empowerment. This first transparency report marks a vital step in that journey, providing a benchmark for accountability and opening a public dialogue about the complexities of building a more open and equitable internet. As the platform continues to grow, subsequent reports should offer further insight into the evolving landscape of decentralized social media governance.