The digital landscape for children’s online safety has been further complicated by a recent legal challenge, as the Attorney General of Texas, Ken Paxton, initiated a lawsuit against the popular online gaming platform Roblox. This action, announced late Thursday, accuses the company of misleading parents regarding the inherent risks on its platform and prioritizing profits over the well-being of its young user base. The lawsuit represents a significant escalation in the ongoing debate surrounding the responsibilities of technology companies in safeguarding minors in virtual environments.

The Allegations Unveiled

In a forceful statement accompanying the legal filing, Attorney General Paxton did not mince words, asserting that Roblox has consciously chosen to prioritize what he termed "pixel pedophiles" and profits over the safety of children. He further characterized the platform as having become a "breeding ground for predators," a stark accusation that underscores the gravity of the state’s concerns. Paxton stressed the need to stop platforms like Roblox from operating as unchecked digital havens for those seeking to exploit children, and warned that any corporation found to be enabling child abuse would face the full force of the law.

A Pattern of Legal Challenges

This lawsuit from Texas does not emerge in a vacuum; it follows growing legal and regulatory scrutiny of Roblox. Attorneys general in states such as Louisiana and Kentucky have already filed similar lawsuits, citing comparable concerns about the platform’s child safety protocols. Beyond these state-level actions, Roblox has also faced multiple private lawsuits in California, Texas, and Pennsylvania. The common thread running through these challenges is the accusation that Roblox has failed to implement sufficient child safety measures, exposing minors to dangers including grooming, explicit content, and violent or abusive material. Taken together, this pattern of litigation suggests a systemic problem rather than isolated incidents, and paints a picture of a company struggling to manage the complex challenges of a user-generated content platform that caters predominantly to children.

Roblox: A Digital Ecosystem for Millions

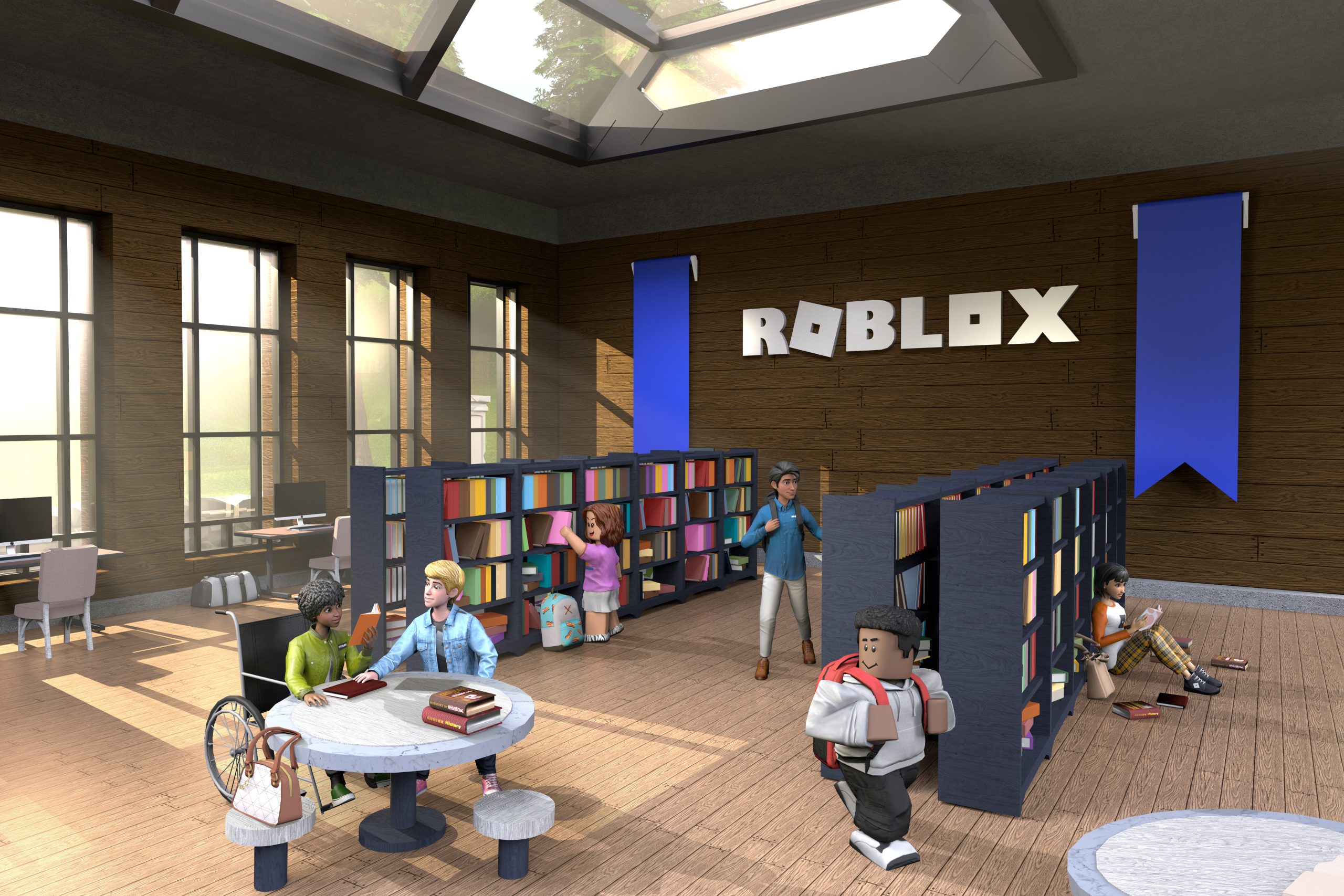

To understand the scope of these allegations, it helps to appreciate the scale and nature of Roblox. Launched in 2006, Roblox is not a single game but a vast online platform where users, primarily children and teenagers, can create their own games and virtual experiences, play games created by others, and socialize. It operates on a "metaverse" model, allowing users to build entire worlds and interact within them. For the quarter ending September 30, 2025, Roblox reported an average of 151.5 million daily active users worldwide, a measure of its enormous reach, particularly among younger users.

The platform’s success is built on its user-generated content (UGC) model, which lets millions of developers, many of whom are themselves children, create and monetize virtual worlds and items using the platform’s virtual currency, Robux. This decentralized creation model fosters immense creativity and a dynamic user experience, but it also creates formidable moderation challenges. The volume and diversity of content generated every day make it extremely difficult to monitor every interaction, game, and chat for inappropriate or harmful material. This architecture places Roblox at the forefront of the debate over platform responsibility in an era of digital co-creation and immersive virtual experiences.

The Broader Landscape of Online Child Safety

The legal action against Roblox reflects a broader societal anxiety regarding the safety of children in increasingly immersive and interactive online environments. The rise of UGC platforms, coupled with the nascent development of the "metaverse," has introduced unprecedented complexities for parents, educators, and policymakers alike. Experts in child psychology and digital safety frequently highlight the unique vulnerabilities of minors online, including their developing critical thinking skills, susceptibility to influence, and potential difficulty in distinguishing between real and virtual threats.

Online grooming, exposure to age-inappropriate content, and cyberbullying are persistent threats that platforms are expected to mitigate. The challenge for companies like Roblox is monumental: how to foster an open, creative, and engaging environment while simultaneously deploying robust, scalable, and effective safeguards against a constantly evolving array of harmful behaviors. This balancing act is further complicated by privacy concerns related to extensive monitoring and age verification methods. The ongoing public discourse often centers on whether technology companies are doing enough, or if their business models inherently conflict with the highest standards of child protection.

Regulatory Momentum: A Global Push for Protection

In response to these escalating concerns, governments around the world have begun enacting increasingly stringent laws and regulations that compel online platforms to strengthen child safety. This marks a significant shift from a largely self-regulated internet to one with more explicit government oversight. A prime example is the United Kingdom’s Online Safety Act, which recently began to be enforced and requires social platforms to implement robust age verification and content moderation systems. Similarly, Mississippi has passed an age-assurance law requiring platforms to verify users’ ages before granting access.

Other U.S. states are following suit, with Arizona, Wyoming, South Dakota, and Virginia actively considering or implementing similar legislative frameworks. These laws typically aim to force platforms to verify users’ ages, restrict access to certain content for minors, and impose greater accountability for harmful material. This legislative trend underscores a growing consensus among lawmakers that the digital realm requires a more proactive and legally enforceable approach to protecting its youngest users, signaling a potential paradigm shift for how online platforms operate and self-regulate.

Roblox’s Defensive Measures and Industry Protocols

In the face of intensifying scrutiny and mounting lawsuits, Roblox has not remained idle. The company has publicly outlined a series of investments aimed at bolstering its child safety infrastructure. These include an age-estimation system that analyzes a selfie scan of a user’s facial features to approximate their age, and standardized content and maturity labels, developed in partnership with organizations such as the International Age Rating Coalition (IARC), to help users and parents judge whether an experience is appropriate.

Roblox has also invested in an AI system designed to detect early signals of child endangerment and has introduced safeguards specifically for teenage users. It has tightened content controls as well, including a policy change that bars users under 13 from messaging others outside of games. In addition, Roblox offers a suite of parental controls, tools that let users restrict communications, and technology that detects when a large number of users are violating platform rules.

In its official response to the Texas lawsuit, Roblox expressed disappointment, stating that it has implemented "industry-leading protocols" to protect its users and combat malicious activity. A company spokesperson said, "We are disappointed that, rather than working collaboratively with Roblox on this industry-wide challenge and seeking real solutions, the AG has chosen to file a lawsuit based on misrepresentations and sensationalized claims." The company reiterated its commitment to child safety, noting that its policies are deliberately stricter than those on many other platforms. It highlighted its prohibition on image and video sharing in chat, its use of filters to block the exchange of personal information, and the continuous monitoring of communications by trained teams and automated tools to detect and remove harmful content.

The Stakes: Social, Cultural, and Economic Impacts

The outcome of the Texas lawsuit, alongside ongoing legal and regulatory actions, carries profound implications across social, cultural, and economic spheres. Socially, the erosion of parental trust in major platforms like Roblox could lead to widespread restrictions on children’s online access, impacting their digital literacy and social development in an increasingly connected world. The constant exposure to news of online dangers also contributes to parental anxiety, shaping broader societal norms around digital parenting.

Culturally, how these platforms are regulated and how they respond will shape the future of youth online culture. If platforms fail to adequately protect children, the resulting regulatory backlash could stifle innovation in user-generated content and metaverse development as companies become overly cautious. Conversely, effective safeguards could foster a more secure and enriching online environment, allowing creativity to flourish without undue risk.

Economically, the stakes for Roblox are substantial. Beyond potential financial penalties and legal costs, a negative public perception of its child safety record could damage its brand reputation and hurt user acquisition and retention, particularly among younger users and their parents. For the broader tech industry, especially companies that rely on UGC or aspire to build metaverses, these cases could set critical precedents. Their outcomes may help define the expected standards for child protection, influencing product development, moderation strategies, and investment in safety technologies across the sector.

Looking Ahead: The Future of Digital Safeguards

The lawsuit filed by the Texas Attorney General against Roblox is a powerful reminder of the complex and evolving challenges of safeguarding children in the digital age. As virtual worlds become more sophisticated and user-generated content platforms continue to expand their reach, the tension between fostering open creativity and ensuring robust protection for minors will only intensify. The outcome of this and similar legal battles will likely shape the regulatory landscape for years to come, influencing how technology companies design their platforms, manage user interactions, and, ultimately, prioritize the well-being of their youngest users. The case also underscores a global demand for greater accountability and transparency from digital platforms, pushing them toward a future in which innovation and child safety are not mutually exclusive but intrinsically linked.