The relentless advance of artificial intelligence, particularly large language models and generative AI, is pushing the limits of computational power and, with it, creating unprecedented thermal challenges inside data centers. Nvidia underscored this looming crisis when it announced its anticipated Rubin series of Graphics Processing Units (GPUs): racks built around the high-performance Ultra version of these chips, slated for release in 2027, are projected to draw an astonishing 600 kilowatts of power. That is nearly double the output of some of the most powerful electric vehicle charging stations available today, and it marks a critical inflection point for data center infrastructure. As these specialized computing environments grow ever more power-intensive, the question of how to efficiently remove the heat they produce becomes paramount. One startup, Alloy Enterprises, proposes an inventive answer to this formidable cooling problem: precisely engineered stacks of metal.

The Unprecedented Power Demands of AI

The exponential growth of artificial intelligence is fundamentally reshaping modern computing. AI workloads are highly parallel and must process vast datasets, so they rely primarily on powerful GPUs. Training complex neural networks, running inference on sophisticated models, and supporting the myriad applications of generative AI demand immense computational resources. Each new generation of AI hardware delivers higher performance and faster processing and, inherently, consumes more electrical power. Because virtually all of the electricity a chip draws is ultimately dissipated as heat, every increase in power consumption brings a matching increase in the heat that must be removed.

For decades, data centers relied primarily on traditional air cooling. Rows of servers, often arranged in hot aisle/cold aisle configurations, used computer room air conditioners (CRACs) or computer room air handlers (CRAHs) to circulate chilled air and carry heat away. This approach worked well for general-purpose computing, where individual server racks typically drew between 5 and 15 kilowatts. As chip densities increased and specialized accelerators such as GPUs became more prevalent, however, the heat generated by a single rack began to outstrip what conventional air cooling could handle. AI racks are now reaching 480 kilowatts and rapidly approaching 600 kilowatts, far beyond the point at which moving enough air to cool them is practical or efficient. This demands a fundamental change in thermal management, away from circulating air and toward direct, liquid-based cooling.
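To put rough numbers on why air runs out of headroom, the sketch below applies the basic heat-balance relation (heat = density × volumetric flow × specific heat × temperature rise) to air and to water. It is a simplified illustration, not a design calculation: the 15 K coolant temperature rise and the room-temperature fluid properties are assumptions, and it ignores fan and pump power, pressure drop, and how heat actually reaches the fluid.

```python
# Rough comparison of the coolant flow needed to carry away a rack's heat.
# ASSUMPTIONS (illustrative only): all electrical power becomes heat, the
# coolant warms by a fixed delta_t, and fluid properties are constant.

AIR_DENSITY = 1.2        # kg/m^3, approx. at ~20 C and sea level
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3, approx. at ~25 C
WATER_CP = 4186.0        # J/(kg*K)


def volumetric_flow(heat_w: float, density: float, cp: float, delta_t: float) -> float:
    """Flow in m^3/s such that heat_w = density * flow * cp * delta_t."""
    return heat_w / (density * cp * delta_t)


def compare(rack_kw: float, delta_t: float = 15.0) -> dict:
    """Air vs. water flow needed to remove rack_kw of heat at the given temperature rise."""
    heat_w = rack_kw * 1000.0
    air = volumetric_flow(heat_w, AIR_DENSITY, AIR_CP, delta_t)
    water = volumetric_flow(heat_w, WATER_DENSITY, WATER_CP, delta_t)
    return {
        "air_m3_per_s": air,
        "water_l_per_s": water * 1000.0,   # 1 m^3 = 1000 L
        "air_to_water_ratio": air / water,
    }


if __name__ == "__main__":
    for kw in (15, 120, 600):
        r = compare(kw)
        print(f"{kw:>4} kW: air {r['air_m3_per_s']:.1f} m^3/s, "
              f"water {r['water_l_per_s']:.2f} L/s, "
              f"ratio {r['air_to_water_ratio']:.0f}x")
```

With these assumptions, removing 600 kW takes roughly 33 cubic meters of air per second versus under 10 liters of water per second. Because the ratio depends only on the fluid properties, water carries the same heat with roughly 3,500 times less volume at any rack power.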

A Brief History of Data Center Thermal Management

The evolution of data center cooling reflects the broader trajectory of computing power. In the early days, simple room air conditioning was often sufficient. As server density increased in the late 20th and early 21st centuries, the focus shifted to optimizing airflow within the data center, leading to designs like hot aisle containment and cold aisle containment. Raised floors facilitated the delivery of cool air, and CRAC units became standard.

By the mid-2000s, with the rise of high-performance computing (HPC) and early forms of specialized processing, direct-to-chip liquid cooling began to appear in niche applications. These systems circulated coolant through cold plates attached to the hottest components, or passed a dielectric fluid directly over them, and then transferred that heat to a facility-level cooling loop. Such solutions were complex and costly, however, and largely confined to supercomputing clusters; the mainstream data center market continued to rely on air.

The explosion of AI in the 2010s, particularly with the advent of deep learning and the critical role of GPUs, accelerated the demand for more robust cooling. GPUs, inherently designed for parallel processing, generate significantly more localized heat than traditional CPUs. This led to a gradual adoption of hybrid cooling solutions, where air cooling handled most components, but liquid cooling, often in the form of rear-door heat exchangers or direct-to-chip cold plates for GPUs, managed the most thermally demanding parts. Today, as power densities soar, liquid cooling is no longer a niche solution but a critical necessity for maintaining operational stability and performance in AI-driven data centers.

Alloy Enterprises’ Innovative Approach to Thermal Dissipation

Amidst this escalating thermal challenge, Alloy Enterprises has emerged with a proprietary technology designed to create highly efficient cooling plates. The company’s innovation centers on transforming sheets of copper into robust, solid cooling plates specifically tailored for GPUs and, crucially, for the myriad peripheral chips that support them. These supporting components—including memory modules, networking hardware, and power delivery units—traditionally accounted for approximately 20% of a server’s cooling load. In a data center environment where racks drew a modest 120 kilowatts, this 20% was often manageable with less intensive cooling methods. However, as Ali Forsyth, co-founder and CEO of Alloy Enterprises, highlighted, the situation changes drastically when racks approach 480 kilowatts and trend towards 600 kilowatts. At these elevated power levels, engineers must find effective liquid cooling solutions for every heat-generating component, from RAM to networking chips, areas where readily available, high-performance solutions have historically been scarce.
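The arithmetic behind Forsyth's point is simple but telling. The sketch below assumes, purely for illustration, that supporting chips keep roughly the same 20% share of the cooling load as racks scale up; the source gives that figure for conventional servers, not as a guarantee for future designs.

```python
# Estimate heat from supporting chips (memory, networking, power delivery),
# ASSUMING their ~20% share of the cooling load stays constant as racks scale.
PERIPHERAL_SHARE = 0.20


def peripheral_heat_kw(rack_kw: float, share: float = PERIPHERAL_SHARE) -> float:
    """Heat in kW attributed to supporting chips rather than the main GPUs."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return rack_kw * share


if __name__ == "__main__":
    for rack_kw in (120, 480, 600):
        print(f"{rack_kw} kW rack: ~{peripheral_heat_kw(rack_kw):.0f} kW from supporting chips")
```

Under that assumption, the supporting chips in a 120 kW rack produce about 24 kW, while those in a 600 kW rack produce about 120 kW: as much heat as an entire rack of the earlier generation.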

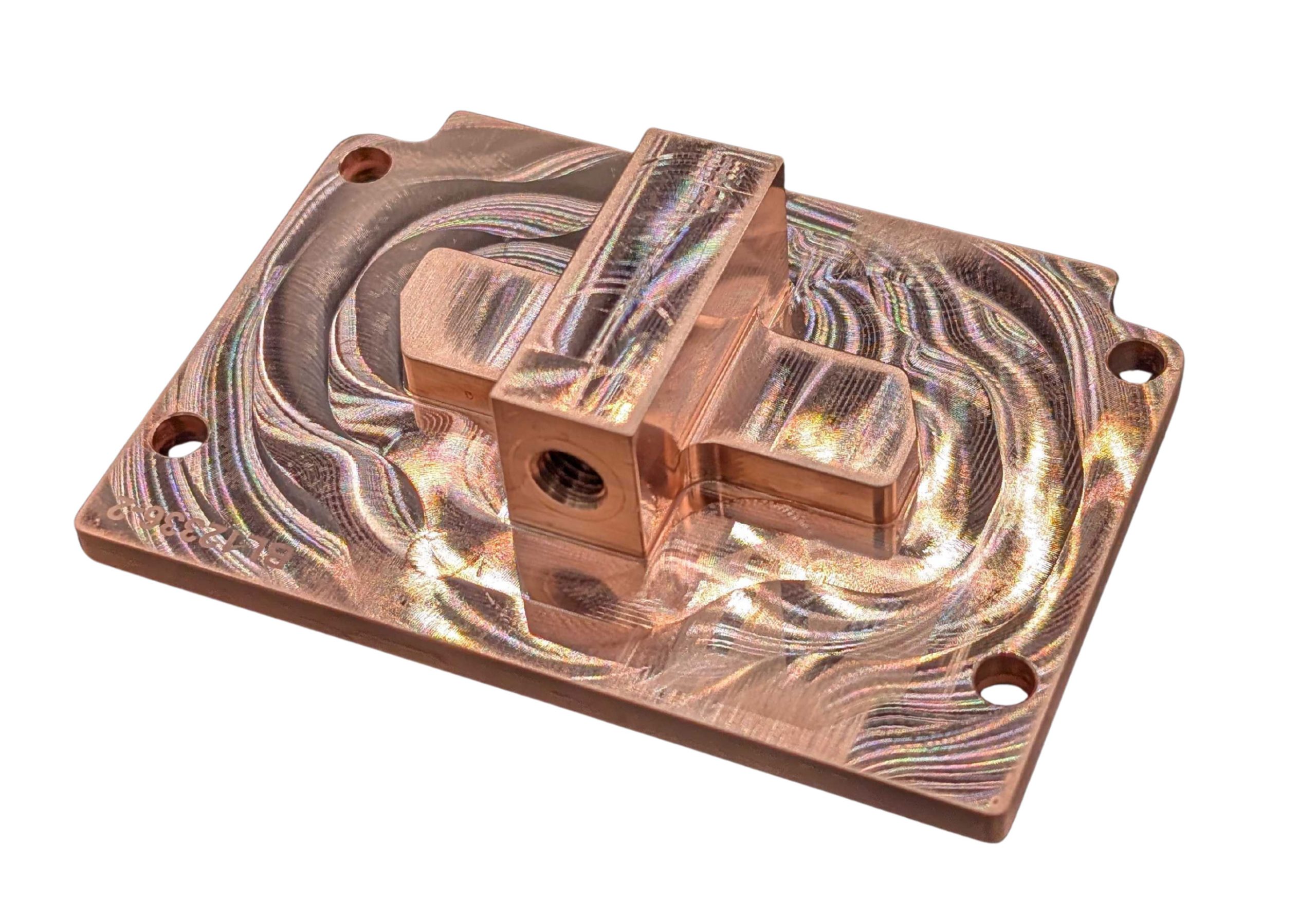

Alloy’s methodology relies on a sophisticated form of additive manufacturing, a process that builds objects layer by layer. Unlike conventional 3D printing, which often uses powdered materials and can produce porous structures, Alloy’s technique, termed "stack forging," uses solid sheets of metal. This distinction is critical for the integrity and performance of the resulting cold plates. By applying intense heat and pressure, the company forces the individual copper sheets to bond at the atomic level, creating what is, for all practical purposes, a single monolithic block of metal. The resulting cold plates can be precisely engineered to fit the extremely tight confines of modern servers while having the structural integrity to withstand the high pressures of liquid cooling systems.

The Science Behind Stack Forging Technology

Traditional cold plates are typically produced through machining, a subtractive manufacturing process where material is removed from a larger block to create the desired shape. This method often involves machining two separate halves of a plate, which are then joined together, commonly through a sintering process that fuses metal powders with heat. The inherent drawback of this approach is the creation of a seam where the two halves meet. Under the high pressures and temperatures encountered in advanced liquid cooling systems, these seams represent potential points of failure, increasing the risk of leaks and compromising long-term reliability.

In contrast, Alloy Enterprises’ stack forging method, a form of diffusion bonding, eliminates the seam altogether. Because the plate is fused from layers of solid copper sheet, the finished part is seamless, and its material properties match those of copper machined from a solid block, giving it maximum strength and durability. As Forsyth explained, the company achieves "raw material properties," meaning the copper retains its intrinsic strength.

Beyond structural integrity, stack forging offers significant advantages in design flexibility and thermal performance. The process allows for the creation of intricate internal geometries with remarkable precision, down to features as small as 50 microns—approximately half the width of a human hair. This level of detail enables engineers to design highly optimized internal channels that maximize the surface area for coolant flow, enhancing heat transfer efficiency. The increased flow paths mean more coolant can pass close to the heat-generating components, leading to superior thermal dissipation. Alloy claims its cold plates deliver a 35% improvement in thermal performance compared to competing products, a crucial metric in the race to manage ever-increasing heat loads.
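The link between fine features and heat transfer can be illustrated with a deliberately idealized geometry. The sketch below assumes a hypothetical 40 mm × 40 mm plate region filled with straight, parallel rectangular channels separated by fins of the same width, all 2 mm deep; real cold plates, including Alloy's, use geometries the source does not describe. Dimensions are integers in micrometers so that counting channels involves no floating-point rounding.

```python
# Toy model: wetted (coolant-contact) area of parallel rectangular channels.
# All dimensions are integers in micrometers; the geometry is hypothetical.

def wetted_area_mm2(plate_width_um: int, plate_length_um: int,
                    channel_width_um: int, channel_depth_um: int) -> float:
    """Approximate coolant-contact area (mm^2) for channels and fins of equal width."""
    pitch_um = 2 * channel_width_um               # one channel plus one fin
    n_channels = plate_width_um // pitch_um       # whole channels that fit across the plate
    # Coolant touches both side walls plus the floor and ceiling of each channel.
    perimeter_um = 2 * (channel_width_um + channel_depth_um)
    area_um2 = n_channels * perimeter_um * plate_length_um
    return area_um2 / 1_000_000                   # 1 mm^2 = 1,000,000 um^2


if __name__ == "__main__":
    for width_um in (500, 100, 50):
        area = wetted_area_mm2(40_000, 40_000, width_um, 2_000)
        print(f"{width_um:>3} um channels: ~{area:,.0f} mm^2 wetted area")
```

In this toy model, shrinking the channels from 500 µm to 50 µm raises the wetted area from 8,000 mm² to 65,600 mm², roughly eight times more surface for the coolant to draw heat from. The trade-off, which the model ignores, is that narrower channels also increase the pressure drop the pump must overcome.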

The manufacturing process within Alloy’s facility begins with preparing and cutting rolls of copper to exact specifications. Laser technology then precisely cuts the intricate features into each individual sheet. To prevent unwanted bonding in certain areas, an inhibitor coating is applied. Once prepared, each meticulously designed slice of the cold plate is precisely registered and stacked. This layered assembly then proceeds into a specialized diffusion bonding machine, where the application of controlled heat and pressure permanently fuses the stacked slices into a single, cohesive piece of metal. This intricate yet highly controlled process underpins the superior performance and reliability of their cold plates.
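The core idea of the process, that openings cut into individual sheets line up to form enclosed internal channels once the stack is bonded, can be modeled with a small voxel grid. The sketch below is a hypothetical illustration, not Alloy's design software: each sheet is a text grid where `#` is copper and `.` is a laser-cut opening, sheets are stacked bottom to top, and a breadth-first search checks that coolant can flow from an inlet port to an outlet port while no opening breaks through the outer surface anywhere else. It ignores the inhibitor coating, bonding physics, and real dimensions.

```python
from collections import deque

# '#' = copper left in the sheet, '.' = opening cut by the laser (hypothetical format)
Layer = list[str]
Voxel = tuple[int, int, int]  # (layer z, row y, column x)

NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def stack(layers: list[Layer]) -> list[list[list[bool]]]:
    """Register sheets (bottom first) into a 3D grid; True marks an open voxel."""
    rows, cols = len(layers[0]), len(layers[0][0])
    for layer in layers:
        if len(layer) != rows or any(len(r) != cols for r in layer):
            raise ValueError("every sheet must share the same outline to register")
    return [[[ch == "." for ch in row] for row in layer] for layer in layers]


def _in_bounds(grid, v: Voxel) -> bool:
    z, y, x = v
    return 0 <= z < len(grid) and 0 <= y < len(grid[0]) and 0 <= x < len(grid[0][0])


def _is_open(grid, v: Voxel) -> bool:
    z, y, x = v
    return grid[z][y][x]


def channel_connects(grid, inlet: Voxel, outlet: Voxel) -> bool:
    """Breadth-first search through open voxels that share a face."""
    if not (_is_open(grid, inlet) and _is_open(grid, outlet)):
        return False
    seen, queue = {inlet}, deque([inlet])
    while queue:
        v = queue.popleft()
        if v == outlet:
            return True
        for dz, dy, dx in NEIGHBORS:
            nb = (v[0] + dz, v[1] + dy, v[2] + dx)
            if _in_bounds(grid, nb) and nb not in seen and _is_open(grid, nb):
                seen.add(nb)
                queue.append(nb)
    return False


def leaks(grid, ports: set[Voxel]) -> list[Voxel]:
    """Open voxels on the plate's outer surface other than the intended ports."""
    nz, ny, nx = len(grid), len(grid[0]), len(grid[0][0])
    found = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                on_surface = z in (0, nz - 1) or y in (0, ny - 1) or x in (0, nx - 1)
                if grid[z][y][x] and on_surface and (z, y, x) not in ports:
                    found.append((z, y, x))
    return found


# Example: a solid base sheet, a sheet with a slot, and a lid with two port holes.
BOTTOM = ["#######", "#######", "#######"]
MIDDLE = ["#######", "#.....#", "#######"]
TOP = ["#######", "#.###.#", "#######"]

if __name__ == "__main__":
    plate = stack([BOTTOM, MIDDLE, TOP])
    inlet, outlet = (2, 1, 1), (2, 1, 5)
    print(channel_connects(plate, inlet, outlet))  # True
    print(leaks(plate, {inlet, outlet}))           # []
```

Because the middle sheet's slot lines up with the two holes in the lid, the bonded stack contains one enclosed channel. Interrupting that slot would make `channel_connects` return `False`, and extending it to the plate's edge would make `leaks` report the unintended opening: a simple version of the kind of check one might run before committing sheets to bonding.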

Market Implications and the Future of Data Center Infrastructure

The market for advanced cooling solutions is surging, driven by the insatiable demand for AI compute. As data center power consumption skyrockets, the operating costs of cooling, including the energy needed to run cooling systems and the water consumed by evaporative cooling towers, are becoming significant. Companies like Alloy Enterprises that offer more efficient thermal management are positioned to capture a substantial share of this growing market. Stack forging is more expensive than traditional machining, but it is more cost-effective than other additive manufacturing techniques such as 3D printing, and that, combined with its performance and reliability, makes it an attractive proposition for data center operators.

The shift toward liquid cooling affects not only the internal design of server racks but also the broader architecture and siting of data centers. Facilities must be designed to accommodate liquid distribution networks, plumbing, and heat rejection systems, and access to reliable power grids and ample water for large-scale cooling becomes an even more critical factor in site selection. Alloy’s ability to produce high-performance, durable cold plates directly supports the viability of next-generation AI infrastructure. The company reports early engagement with "all the big names" in the data center industry; it has not named them, but such interest would signal strong market validation for its technology. Alloy originally developed its process for a widely used aluminum alloy, but sensing urgent demand from data centers, it adapted the process to work with copper, a material prized for its excellent thermal conductivity and corrosion resistance, both ideal properties for liquid cooling. According to Forsyth, this pivot drew an explosive market response when the product was announced.

Environmental Footprint and Sustainability Challenges

Beyond operational efficiency and performance, the escalating power demands of AI and the associated cooling requirements raise significant environmental and sustainability concerns. Data centers already consume a substantial amount of global electricity, and the rapid expansion of AI threatens to exacerbate this footprint. Inefficient cooling systems not only waste energy but also contribute to increased carbon emissions. Furthermore, many cooling technologies, particularly those relying on evaporative cooling towers, consume vast quantities of water, placing additional strain on local water resources, especially in drought-prone regions.

Innovations in cooling, such as Alloy’s high-efficiency cold plates, play a crucial role in mitigating these environmental impacts. By enabling more effective heat dissipation, these technologies can reduce the overall energy consumption of data centers, thereby lowering their carbon footprint. More efficient cooling also allows for higher computational density within existing infrastructure, potentially reducing the need for new data center construction and the associated material and land use. The industry is under increasing pressure from regulators, investors, and the public to adopt more sustainable practices, making advanced cooling solutions not just an operational necessity but also a critical component of corporate social responsibility.

The Road Ahead for Advanced Cooling

The journey to effectively cool the future of AI is far from over. While solutions like stack-forged copper cold plates represent a significant leap forward, the industry continues to explore a diverse range of advanced thermal management techniques. Immersion cooling, where entire servers are submerged in dielectric fluids, and two-phase cooling, which leverages the latent heat of vaporization for highly efficient heat transfer, are also gaining traction. Each approach presents its own set of advantages and challenges in terms of cost, scalability, and maintainability.
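One way to see why latent heat is attractive is to compare how much coolant mass must flow to absorb the same heat. The sketch below contrasts single-phase water warming by an assumed 10 K with a two-phase coolant that absorbs heat by evaporating. The 150 kJ/kg latent heat is a placeholder assumption, not the property of any specific fluid, and the `quality` parameter (the fraction of the flow that evaporates) is included because practical two-phase loops typically do not evaporate all of their coolant.

```python
# Coolant mass flow needed for a given heat load: sensible vs. latent heat.
# ASSUMED values for illustration only; not data for any specific product.
WATER_CP = 4186.0          # J/(kg*K), liquid water near room temperature
ASSUMED_H_FG = 150_000.0   # J/kg, hypothetical latent heat of a two-phase coolant


def single_phase_kg_per_s(heat_w: float, cp: float = WATER_CP, delta_t: float = 10.0) -> float:
    """Mass flow if the liquid only warms by delta_t kelvin (no boiling)."""
    return heat_w / (cp * delta_t)


def two_phase_kg_per_s(heat_w: float, h_fg: float = ASSUMED_H_FG, quality: float = 1.0) -> float:
    """Mass flow if a fraction `quality` of the coolant evaporates, absorbing h_fg per kg.

    Assumes the coolant enters already at its boiling point (no sensible heating).
    """
    if not 0.0 < quality <= 1.0:
        raise ValueError("exit vapor quality must be in (0, 1]")
    return heat_w / (h_fg * quality)


if __name__ == "__main__":
    load_w = 600_000.0
    print(f"single-phase water: {single_phase_kg_per_s(load_w):.1f} kg/s")
    for q in (1.0, 0.5):
        print(f"two-phase, quality {q}: {two_phase_kg_per_s(load_w, quality=q):.1f} kg/s")
```

With these assumed values, carrying 600 kW takes about 14.3 kg/s of water but only about 4 kg/s of the hypothetical two-phase fluid at full evaporation, or 8 kg/s if half of it evaporates. The boiling fluid also stays near a constant temperature, though two-phase systems bring their own complexity in pressures, pumps, and fluid handling.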

The critical insight from Alloy Enterprises is the realization that effective cooling must extend beyond just the primary processing units. As total rack power approaches unprecedented levels, every component that generates heat—from the main GPUs to the smallest memory modules—requires dedicated thermal management. The race to develop and deploy these cutting-edge cooling technologies is not merely about optimizing performance; it is about enabling the continued growth and innovation of artificial intelligence itself. Without scalable, efficient, and reliable cooling solutions, the ambitious visions for AI’s future may well be curtailed by the fundamental laws of thermodynamics. Companies like Alloy Enterprises are at the forefront of this silent battle, working to ensure that the AI revolution can continue to thrive without overheating its foundational infrastructure.