Google is integrating its generative AI model, Gemini, into Google Maps. The upgrade aims to make navigation and local discovery more intelligent, conversational, and hands-free. It moves the service beyond static directions toward an AI-powered co-pilot for daily commutes and longer trips alike.

A Legacy of Innovation: Google Maps’ Journey to AI-Powered Intelligence

The journey of Google Maps began nearly two decades ago, launching in 2005 with a simple yet revolutionary promise: to provide accessible, digital maps to the masses. Initially a web-based service, it quickly expanded to mobile devices, fundamentally altering how people navigate, explore, and interact with the physical world. Over the years, Google Maps has evolved from a basic directional tool into a sophisticated ecosystem, incorporating satellite imagery, Street View, public transit information, business listings, real-time traffic updates, and personalized recommendations.

This evolution has been consistently driven by advancements in data collection, algorithmic processing, and, increasingly, artificial intelligence. Early AI applications in Maps focused on optimizing search results, improving estimated arrival times by analyzing traffic patterns, and personalizing recommendations based on user history. The introduction of voice commands and the Google Assistant marked a preliminary step towards hands-free interaction, allowing users to ask for directions or find nearby points of interest without touching their device. However, these interactions were largely command-based and less fluid than natural human conversation.

The past year has seen a more aggressive push to integrate generative AI into Google Maps, including discovery features that let users ask open-ended questions about places and receive more nuanced search results. The current integration of Gemini, Google’s multimodal AI model, goes a step further. Gemini can understand and operate across several types of information (text, code, audio, image, and video), and it brings greater conversational fluency and contextual understanding to the mapping experience. Rather than a simple query-response exchange, it supports natural, multi-turn dialogue, which matters most for hands-free operation while driving.

Conversational AI: Your Intelligent Co-Pilot on the Road

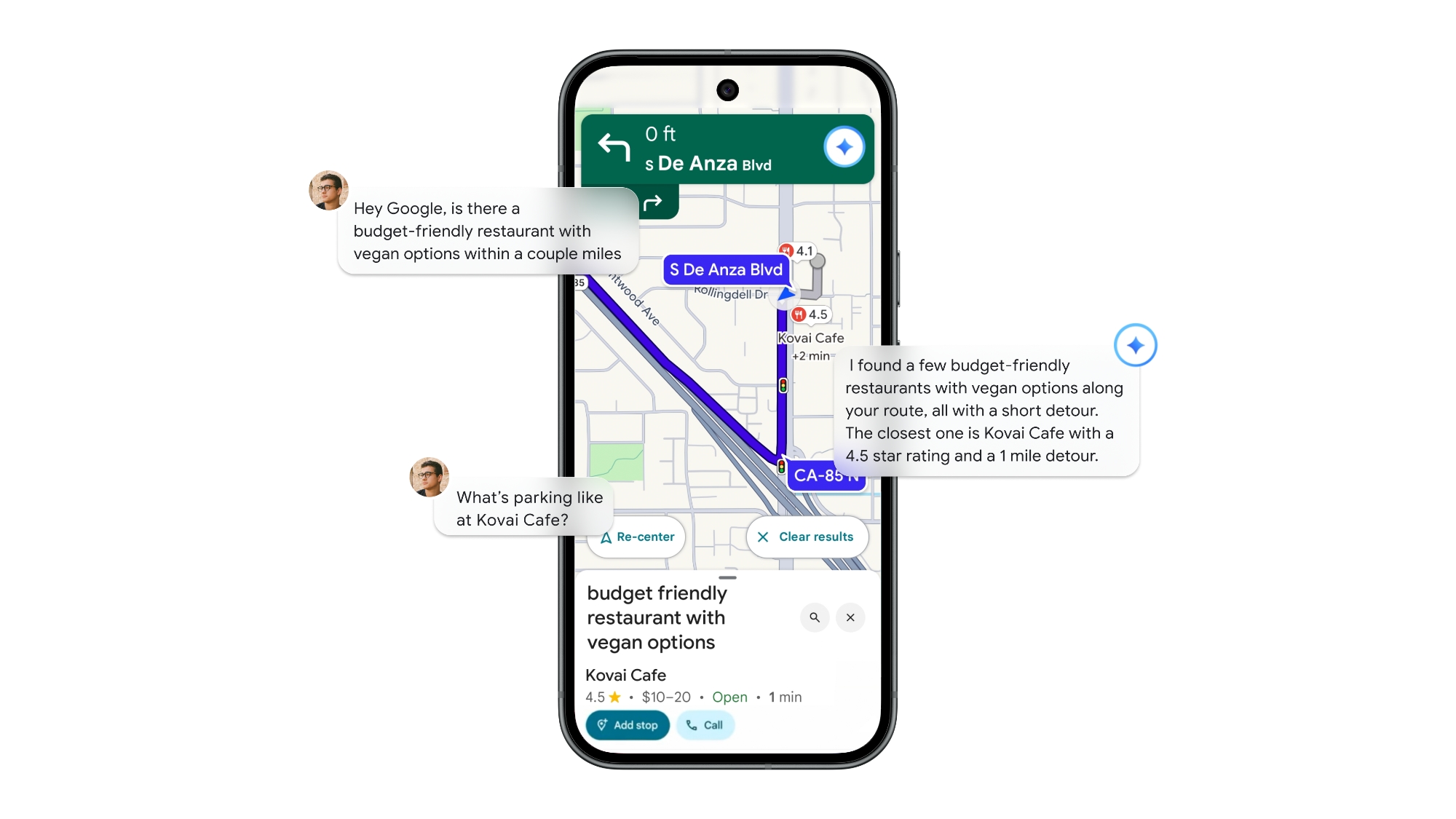

One of the most impactful aspects of the Gemini integration is its ability to serve as an intelligent, conversational co-pilot while driving. Users can now engage with Gemini directly within the Maps interface, asking complex, layered questions and receiving real-time, contextually relevant answers without diverting their attention from the road. This capability extends far beyond basic navigation queries.

Imagine a scenario where a driver is on a long trip and starts to feel hungry. Instead of fumbling with their phone or trying to articulate a precise search query, they can simply ask Gemini: "Is there a budget-friendly restaurant with vegan options along my route, something within a couple of miles?" Following up, they might ask, "What’s parking like there?" or "Are there any reviews mentioning outdoor seating?" Gemini’s ability to process these multi-part questions in a continuous conversation represents a significant leap from previous voice assistants. It understands context, remembers previous parts of the conversation, and provides detailed, filtered results, making spontaneous stops or planning detours significantly easier and safer.
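The multi-turn behavior described above can be pictured as a conversation object that carries filters and a "place in focus" from one question to the next. The following is only a minimal sketch under stated assumptions: the `Place` and `Conversation` classes, their fields, and the sample data are hypothetical illustrations, not Google's implementation or any real Maps API, and plain keyword arguments stand in for the language understanding that Gemini would perform.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Place:
    # Hypothetical record for a restaurant along the route.
    name: str
    price_level: int      # 1 = cheapest
    vegan_options: bool
    detour_miles: float   # extra distance from the current route
    parking: str


@dataclass
class Conversation:
    places: list
    filters: dict = field(default_factory=dict)
    focus: Optional[Place] = None  # the place that "there" refers to

    def search(self, **new_filters):
        # Filters accumulate across turns instead of being reset.
        self.filters.update(new_filters)
        matches = [
            p for p in self.places
            if p.price_level <= self.filters.get("max_price", 4)
            and (p.vegan_options or not self.filters.get("vegan", False))
            and p.detour_miles <= self.filters.get("max_detour_miles", float("inf"))
        ]
        matches.sort(key=lambda p: p.detour_miles)
        self.focus = matches[0] if matches else None
        return matches

    def follow_up(self, attribute):
        # Answers a follow-up question about the place currently in focus.
        if self.focus is None:
            return "No place is in focus yet."
        return f"{self.focus.name}: {getattr(self.focus, attribute)}"


places = [
    Place("Green Bowl", 1, True, 1.2, "small lot behind the building"),
    Place("Steak Palace", 3, False, 0.5, "valet only"),
    Place("Far Vegan", 1, True, 6.0, "street parking"),
]
convo = Conversation(places)
results = convo.search(max_price=1, vegan=True, max_detour_miles=2.0)
answer = convo.follow_up("parking")  # "What's parking like there?"
```

The point of the sketch is the retained state: the follow-up never names the restaurant, yet it can be answered because the earlier turn left a place in focus.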

Beyond immediate navigation needs, Gemini can also address a wider range of informational queries. Users can ask for updates on sports scores, current news headlines, or even perform personal tasks like adding an event to their calendar, all through voice commands while their hands remain on the wheel. This integration blurs the lines between a dedicated navigation app and a comprehensive digital assistant, transforming the driving experience into a more informed and productive one. The underlying natural language processing capabilities of Gemini are designed to minimize frustration, reduce the need for precise phrasing, and deliver relevant information efficiently, thereby reducing cognitive load on the driver.

Revolutionizing Navigation with Landmark-Based Directions

For years, navigation apps have relied primarily on distance-based turn instructions ("turn right in 500 feet"). While effective, this approach can be challenging in unfamiliar areas, dense urban environments, or when street signs are obscured or absent. Google Maps is now addressing this common pain point by combining Gemini’s intelligence with its vast Street View data to offer landmark-based navigation instructions.

Instead of a generic distance prompt, users will now receive directions like, "Turn right after the Shell gas station" or "Take the next left past the historic library." This change improves clarity and reduces the likelihood of missed turns, particularly for people less comfortable with abstract distance measurements. The system uses Gemini to cross-reference information about more than 250 million places with high-resolution Street View images. The analysis identifies prominent, easily recognizable landmarks (such as gas stations, restaurants, unique buildings, or specific storefronts) that are visible from the driver’s perspective just before a turn.
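To make the selection step concrete, here is a minimal sketch of choosing a landmark for a turn instruction. Everything in it is an assumption made for illustration: the `Candidate` fields, the `visibility` score, and the distance and visibility thresholds are hypothetical, and the article does not describe how Google actually ranks landmarks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    metres_before_turn: float  # how far before the turn the landmark sits
    visibility: float          # 0..1, hypothetical score from street-level imagery


def pick_landmark(candidates, max_distance=80.0, min_visibility=0.6) -> Optional[Candidate]:
    # Keep only landmarks close to the turn and clearly visible to the driver.
    usable = [
        c for c in candidates
        if 0 <= c.metres_before_turn <= max_distance and c.visibility >= min_visibility
    ]
    if not usable:
        return None
    # Prefer the most visible landmark; break ties with the one nearest the turn.
    return max(usable, key=lambda c: (c.visibility, -c.metres_before_turn))


def instruction(turn, candidates, fallback_distance_ft):
    landmark = pick_landmark(candidates)
    if landmark is None:
        # No suitable landmark: fall back to the classic distance prompt.
        return f"Turn {turn} in {fallback_distance_ft} feet"
    return f"Turn {turn} after the {landmark.name}"


candidates = [
    Candidate("Shell gas station", 30.0, 0.9),
    Candidate("corner cafe", 20.0, 0.5),        # too hard to see
    Candidate("historic library", 200.0, 0.95),  # too far from the turn
]
with_landmark = instruction("right", candidates, 500)
without_landmark = instruction("right", [], 500)
```

The fallback branch reflects a reasonable design choice: when nothing recognizable is near the turn, a distance prompt is still better than a vague or misleading landmark.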

The impact of this feature extends beyond mere convenience. It taps into how humans naturally orient themselves in space: when asking a local for directions, one often hears references to recognizable points. By mimicking this method, Google Maps aims to make navigation less stressful. The feature is particularly beneficial for tourists exploring new cities, delivery drivers navigating complex routes, or anyone driving in an area without clear street signage, and it is intended to improve both safety and user confidence.

Dynamic Traffic Management and Proactive Incident Alerts

Real-time traffic information has long been a cornerstone of Google Maps’ utility, saving countless hours for commuters and travelers. The integration of Gemini further refines this critical function by streamlining incident reporting and enhancing proactive alerts. Drivers can now report traffic incidents, such as accidents, construction zones, or stalled vehicles, using natural voice commands through Gemini. This hands-free method makes it safer and more convenient for users to contribute to the collective traffic data, potentially leading to more accurate and up-to-the-minute information for everyone.
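As a rough illustration of turning a spoken report into structured data, the sketch below maps an utterance to one of the incident types mentioned above. It is deliberately simplistic and rests on assumptions: keyword matching stands in for the language model, and the `IncidentReport` type, the keyword table, and the coordinates are hypothetical rather than part of any Google Maps interface.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical keyword table; a real system would rely on a language model.
INCIDENT_KEYWORDS = {
    "accident": ("accident", "crash", "collision"),
    "construction": ("construction", "roadwork", "road work"),
    "stalled_vehicle": ("stalled", "broken down", "broken-down"),
}


@dataclass
class IncidentReport:
    kind: str
    lat: float
    lon: float


def parse_report(utterance, lat, lon) -> Optional[IncidentReport]:
    # Returns a structured report, or None if no incident type is recognized.
    text = utterance.lower()
    for kind, keywords in INCIDENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return IncidentReport(kind, lat, lon)
    return None


report = parse_report("There's a crash in the left lane", 37.0, -122.0)
ignored = parse_report("Nice weather today", 37.0, -122.0)
```

Attaching the driver's current position to the report is what lets a spoken remark feed into shared traffic data without any manual input.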

Moreover, Maps will leverage Gemini to provide more proactive and intelligent notifications about disruptions ahead on the route. This goes beyond simply showing traffic jams; it involves anticipating potential delays and offering alternative routes with greater context. For instance, if a major event is causing gridlock near a user’s destination, Gemini could provide an alert not just about the traffic itself, but also suggest parking alternatives or public transport options, offering a more holistic solution rather than just rerouting.

This enhancement builds upon Google Maps’ history of leveraging crowd-sourced data, anonymized location information, and predictive analytics to deliver highly accurate traffic predictions. By making the input process more seamless and the output more intelligent, Gemini aims to create a more responsive and adaptive navigation system, helping users avoid frustrating delays and optimize their travel time more effectively.

Augmented Discovery: Interacting with Your Surroundings through AI

Beyond in-car navigation, Gemini’s integration with Google Maps, specifically through Google Lens, opens up new avenues for augmented discovery of the physical world. Users can now point their smartphone camera at a landmark, restaurant, or any point of interest in their surroundings and ask Gemini questions about it. For example, pointing the camera at a historical building, one could ask, "What is this place and why is it popular?" or at a restaurant, "Is this a good place for lunch with kids?"

This feature leverages the visual recognition capabilities of Google Lens combined with Gemini’s vast knowledge base and conversational understanding. It transforms the smartphone camera into an intelligent exploration tool, providing instant, contextual information about the world around the user. This is particularly transformative for tourists, urban explorers, or anyone with a casual curiosity about their environment. It makes information about local businesses, historical sites, or natural attractions immediately accessible and interactive, blurring the lines between the digital and physical realms.

The feature could also change how people relate to their surroundings by encouraging curiosity and spontaneous learning. For local businesses, it enhances discoverability, allowing potential customers to gain immediate insights without needing to search manually. The result is a more dynamic and informative way for individuals to interact with their local environment, in which any walk or drive can become an occasion for discovery.

Market, Social, and Cultural Implications

The deep integration of Gemini into Google Maps marks a significant shift, not just for Google but for the broader tech and automotive industries. From a market perspective, the move strengthens Google Maps’ position as a leader in location-based services and sets a new benchmark for competitors. It also raises what consumers expect from a navigation app, moving it from a functional utility toward an intelligent companion.

Socially, the emphasis on hands-free interaction and natural language processing has considerable implications for driver safety. Minimizing manual input and visual interaction with the device may reduce the risk of distracted driving. It also enhances accessibility for users with various physical limitations, making navigation tools more inclusive. The ability to ask complex questions conversationally simplifies decision-making on the go, reducing stress and improving the overall travel experience.

Culturally, this development reflects a growing reliance on AI assistants in daily life. As these technologies become more seamless and intuitive, they are increasingly woven into the fabric of human interaction with technology. The "co-pilot" metaphor is becoming a reality, where AI doesn’t just execute commands but anticipates needs, offers suggestions, and participates in a more natural dialogue. This trend could accelerate the adoption of AI in other areas, from home automation to personal productivity.

For businesses, especially those in the hospitality and retail sectors, the enhanced discoverability features mean an even greater emphasis on maintaining accurate and compelling online profiles. The ability of Gemini to quickly surface detailed information about nearby establishments means that up-to-date menus, hours, reviews, and amenities will be crucial for attracting spontaneous visitors.

Rollout and Future Outlook

Google has outlined a phased rollout for these Gemini features. The new Gemini navigation capabilities, including conversational queries and task management, are slated to become available on both iOS and Android devices in the coming weeks, with support for Android Auto expected soon thereafter. Proactive traffic alerts are initially rolling out to Android users in the U.S., while landmark-based navigation will launch first in the U.S. on both iOS and Android. The Google Lens integration with Gemini for augmented discovery is also expected to launch in the U.S. later this month. This region-specific rollout likely allows Google to gather initial user feedback and fine-tune the AI models before a broader international deployment.

Looking ahead, the integration of Gemini into Google Maps is not an endpoint but a stepping stone. As AI models become more sophisticated, we can anticipate deeper personalization, predictive capabilities that anticipate user needs before they are articulated, and more seamless integration with other smart devices and services. The vision is an intelligent, adaptive digital guide that understands both the complexities of the physical world and the nuances of human intent, making every journey more efficient, enjoyable, and insightful. The update places Google Maps at the forefront of location intelligence and points to a future in which AI acts as an ever-present companion for exploring the world.