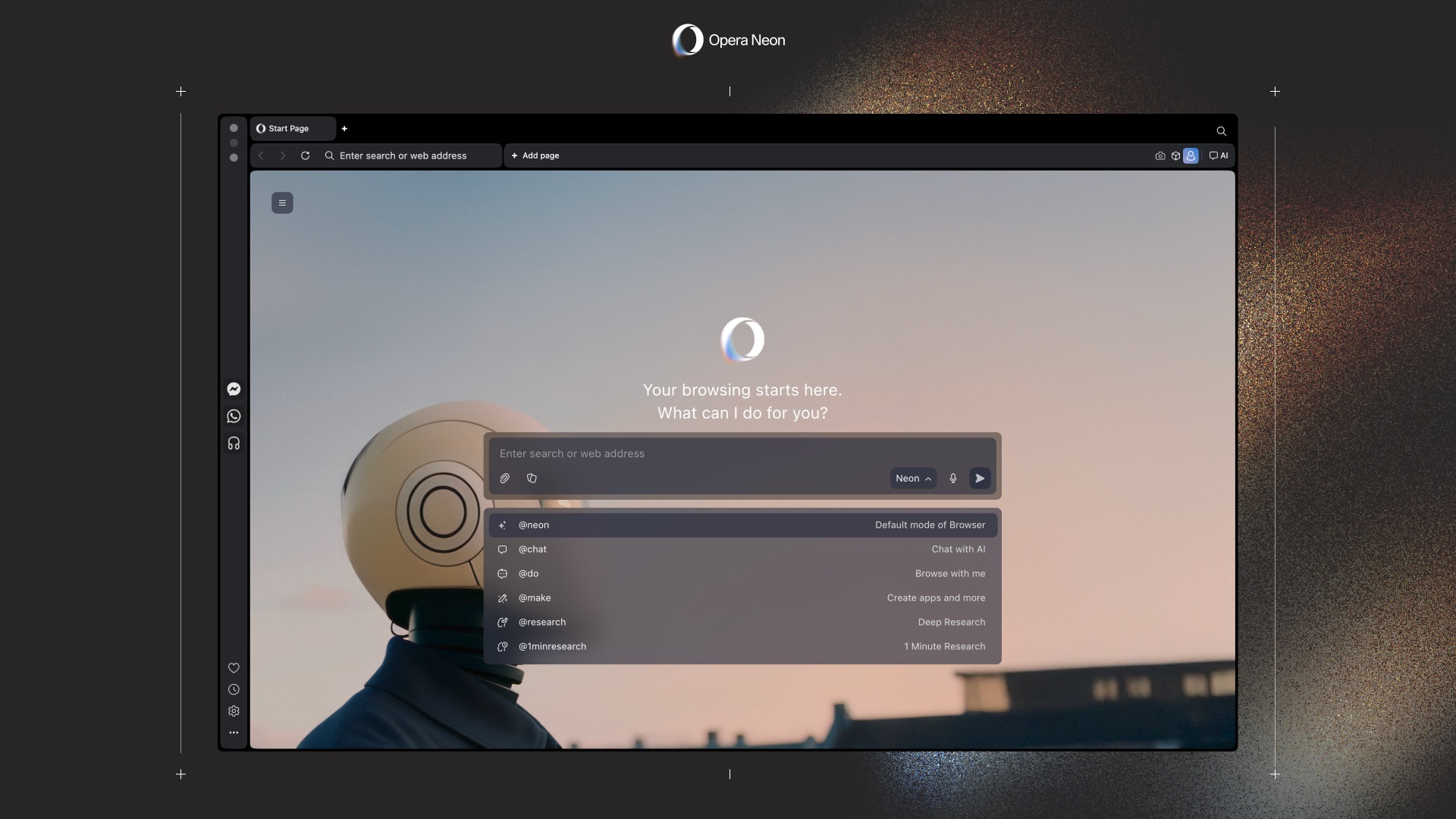

Google has announced a pivotal organizational change in its pursuit of artificial intelligence leadership, appointing Amin Vahdat to the newly created role of Chief Technologist for AI Infrastructure. In the new position Vahdat reports directly to CEO Sundar Pichai, a sign of the importance Google now assigns to the technology underpinning its AI efforts. The move, first reported by Semafor and subsequently confirmed by TechCrunch, underscores a deepening commitment to infrastructure as a key differentiator in a fiercely competitive AI landscape. Google’s parent company, Alphabet, projects that capital expenditures will rise significantly beyond the $93 billion anticipated by the close of 2025.

The Escalating AI Infrastructure Arms Race

The current era is often characterized as an "AI arms race," with technology giants and innovative startups vying to develop and deploy the most powerful and efficient artificial intelligence models. At the heart of this competition lies not just algorithmic brilliance but also the sheer computational power and sophisticated infrastructure required to train and run these complex systems. Large Language Models (LLMs) and other advanced AI applications demand unprecedented levels of processing capability, vast data storage, and high-speed communication networks. This burgeoning demand has catalyzed a frantic investment cycle across the industry, with companies like Google, Microsoft, Amazon, and Meta pouring billions into specialized hardware, data centers, and global networking solutions.

Historically, Google has been at the forefront of large-scale computing, pioneering technologies that enabled the indexing of the internet and the creation of global services like Search, Gmail, and YouTube. As AI began to emerge as a transformative force, Google recognized early on that conventional computing architectures might not suffice. This realization spurred internal initiatives to design custom hardware and software tailored specifically for machine learning workloads, laying the groundwork for the infrastructure that Vahdat has been instrumental in building. His promotion now formalizes and elevates the strategic imperative of this foundational work, recognizing that the future of AI is inextricably linked to its physical and logical infrastructure.

Amin Vahdat: A Legacy of Scale and Efficiency

Amin Vahdat is not a newcomer to Google’s formidable engineering operations; his influence has been quietly shaping the company’s technological backbone for a decade and a half. A computer scientist with a Ph.D. from UC Berkeley, Vahdat began his career with foundational research at Xerox PARC in the early 1990s, a hub of innovation that birthed many modern computing paradigms. Before joining Google in 2010 as an engineering fellow and Vice President, he held prominent academic positions as an associate professor at Duke University and later as a professor and SAIC Chair at UC San Diego. His academic record is substantial: approximately 395 published papers, consistently focused on the challenges of achieving efficiency and scalability in massive computing environments.

Throughout his tenure at Google, Vahdat has been the architect behind many of the critical systems that empower the company’s global operations and, increasingly, its AI initiatives. His expertise bridges the gap between theoretical computer science and practical, large-scale system deployment, a rare and invaluable combination in today’s technology landscape. His deep understanding of networking, distributed systems, and custom hardware has positioned him as a central figure in Google’s strategic response to the computational demands of modern AI.

Pioneering Google’s AI Hardware and Network Ecosystem

Vahdat’s contributions span several critical domains within Google’s infrastructure, each vital to its AI ambitions:

- Tensor Processing Units (TPUs): A cornerstone of Google’s AI strategy, TPUs are custom-designed application-specific integrated circuits (ASICs) engineered to accelerate machine learning workloads. Google developed TPUs to overcome the limitations of general-purpose CPUs and GPUs for AI tasks, providing superior performance and energy efficiency for training and inference. Vahdat has been a key figure in the development trajectory of these processors. Just eight months prior to his latest promotion, at Google Cloud Next, he unveiled the seventh generation of these chips, codenamed "Ironwood." He highlighted their capabilities, noting that a single pod comprises over 9,000 chips, collectively delivering 42.5 exaflops of compute power. To put this in perspective, Vahdat stated this was more than 24 times the power of the world’s most potent supercomputer at the time. He also noted that "demand for AI compute has increased by a factor of 100 million in just eight years," illustrating the relentless pressure on infrastructure.

- Jupiter Network: Beyond individual processing units, the ability to seamlessly connect thousands of these components and move vast datasets rapidly is paramount for AI training. Vahdat has been instrumental in orchestrating the development and expansion of Google’s Jupiter network, its proprietary super-fast internal data center network. This network enables all Google servers to communicate at incredible speeds, facilitating the movement of petabytes of data required for distributed AI model training. In a blog post, Vahdat revealed that Jupiter now scales to an astonishing 13 petabits per second, a bandwidth theoretically capable of supporting simultaneous video calls for all 8 billion people on Earth. This unparalleled networking capability is a critical, albeit often unseen, advantage that allows Google to efficiently manage and deploy its massive AI models.

- Borg Software System: Orchestrating the myriad servers, storage devices, and network components across Google’s sprawling data centers is the Borg software system, the company’s internal cluster management system. Often described as the "brain" coordinating all operations within Google’s infrastructure, Borg intelligently allocates resources, schedules tasks, and ensures high availability and efficiency. Vahdat’s deep involvement in Borg’s evolution has been crucial for optimizing how Google’s AI workloads are managed and executed, ensuring that the immense computational power of TPUs and the bandwidth of Jupiter are utilized effectively.

- Axion CPUs: Expanding beyond specialized AI accelerators, Google has also ventured into developing its own general-purpose CPUs. Vahdat oversaw the development of Axion, Google’s first custom Arm-based processors designed specifically for data centers. Unveiled last year, Axion aims to provide Google with greater control over its computing stack, optimize performance for diverse workloads, and potentially reduce costs and energy consumption compared to off-the-shelf alternatives. This vertical integration strategy, from custom silicon to network architecture and cluster management software, is a hallmark of Google’s approach to achieving competitive advantage in the AI era.
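The headline figures quoted for Ironwood and Jupiter are easier to judge once they are broken down per chip, per year, and per person. The short Python sketch below does that back-of-envelope arithmetic using only the numbers cited above. Two caveats apply: the pod size is rounded down to 9,000 chips because only "over 9,000" is stated, and the numeric precision behind the exaflops figures is not given here, so the supercomputer comparison is taken at face value. The variable names are illustrative.

```python
# Back-of-envelope checks on the infrastructure figures quoted in this article.
# Every input is a number cited in the text; nothing is measured independently.

EXA = 10**18
PETA = 10**15

# Ironwood pod: 42.5 exaflops across "over 9,000 chips".
pod_flops = 42.5 * EXA
chips_per_pod = 9_000  # assumption: lower bound, so per-chip result is an upper bound
per_chip_pflops = pod_flops / chips_per_pod / PETA

# "More than 24 times" the top supercomputer implies that machine's rough size.
supercomputer_ratio = 24
implied_supercomputer_exaflops = pod_flops / supercomputer_ratio / EXA

# A 100-million-fold increase in eight years, expressed as a yearly multiple.
demand_growth = 100_000_000
years = 8
annual_growth = demand_growth ** (1 / years)

# Jupiter: 13 petabits per second shared among 8 billion people.
jupiter_bits_per_second = 13 * PETA
people = 8_000_000_000
per_person_mbps = jupiter_bits_per_second / people / 10**6

if __name__ == "__main__":
    print(f"Per-chip compute (upper bound): {per_chip_pflops:.2f} petaflops")
    print(f"Implied top supercomputer: {implied_supercomputer_exaflops:.2f} exaflops")
    print(f"Implied yearly demand growth: {annual_growth:.1f}x")
    print(f"Jupiter bandwidth per person: {per_person_mbps:.3f} Mbit/s")
```

Under these assumptions each chip contributes at most about 4.7 petaflops, the comparison supercomputer comes out at roughly 1.77 exaflops, demand grows about tenfold per year, and Jupiter's capacity works out to about 1.6 megabits per second per person, which is in the range of a modest video call.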

Strategic Implications and Market Impact

Vahdat’s promotion is not merely an internal reshuffling; it is a clear strategic declaration by Google. It signals the company’s recognition that leadership in AI hinges as much on superior infrastructure as on groundbreaking algorithms. While models like Gemini capture headlines, their existence and performance depend entirely on robust, scalable, and efficient underlying hardware and software systems. By centralizing leadership of this critical area under a seasoned veteran reporting directly to the CEO, Google aims to accelerate innovation, streamline decision-making, and ensure tight integration between its AI research and development efforts and the foundational technology required to bring them to fruition.

The market implications of such a move are significant. It reinforces the notion that the competitive battleground for AI is shifting from purely software-centric innovation to a full-stack approach, where companies design and control every layer from the silicon up. This trend is evident across the industry, with other tech giants also investing heavily in custom AI chips and infrastructure. NVIDIA, a dominant player in AI hardware with its GPUs, faces increasing competition not only from AMD and Intel but also from its own customers, who are becoming self-sufficient in parts of their AI stack.

Furthermore, this appointment reflects the intense "talent war" in the AI space. Top AI researchers and infrastructure engineers command astronomical compensation and are highly sought after. Elevating a long-serving, indispensable figure like Vahdat to the C-suite serves not only to reward his contributions but also to solidify his position and ensure his continued leadership within the company. Vahdat has spent 15 years building the foundation of Google’s AI capabilities, and retaining such a linchpin is a strategic imperative.

Looking Ahead: The Future of AI Infrastructure

As AI models continue to grow in complexity and size, the demands on infrastructure will only intensify. The need for even more powerful TPUs, faster networks, and more intelligent resource management systems will drive continuous innovation. Vahdat’s new role positions him at the vanguard of this ongoing evolution, tasked with ensuring that Google’s infrastructure remains ahead of the curve, capable of supporting the next generation of AI breakthroughs.

His appointment underscores a fundamental truth in the digital age: while the applications and services built on technology are what users experience, it is the invisible, intricate, and robust infrastructure beneath them that truly enables their existence and drives progress. Google’s strategic elevation of Amin Vahdat is a testament to this principle, signaling a resolute commitment to building the most advanced and efficient foundation for its AI-powered future.